What is Universality in LLMs? How to Find Universal Neurons

Understanding Universality in Large Language Models (LLMs)

Large Language Models (LLMs) have reshaped artificial intelligence through their ability to process and generate human-like text. One intriguing aspect of LLMs is the concept of "universality." What does universality mean in this context, and how can we identify universal neurons within these models? This post addresses both questions, offering insights and practical guidance.

What Does Universality Mean in LLMs?

Universality refers to a model’s capacity to generalize what it has learned across tasks and domains. In essence, a universal LLM can solve diverse linguistic tasks without extensive retraining or task-specific fine-tuning. This matters because it makes the model more efficient and adaptable in real-world applications.

The Importance of Universality

- Versatility Across Domains: Universal LLMs can handle multiple data types, languages, and contexts, making them invaluable in fields like healthcare, customer service, and education.

- Cost-Efficiency: By minimizing the need for customization, organizations can reduce operational costs associated with model training and deployment.

- Robustness: A universal approach enhances a model’s performance on unseen data, allowing it to address novel challenges effectively.

Characteristics of Universal Neurons

Within LLMs, certain neurons exhibit universal characteristics: they behave consistently across different tasks. Identifying and understanding these neurons can deepen our understanding of model behavior and, in turn, help improve outcomes.

Key Traits of Universal Neurons

- Task Independence: Universal neurons behave consistently regardless of the task the model is performing. For instance, a neuron that responds to sentiment would activate on emotionally charged text whether it appears in reviews, conversations, or academic articles.

- Composite Functionality: These neurons often contribute to multiple functions, such as tracking context, analyzing sentiment, and translating between languages. Consequently, they play a pivotal role in the versatility of LLMs.

- Transferability: Insights derived from universal neurons tend to be applicable across various scenarios. Learning through one task can yield benefits in another, highlighting the interconnected nature of knowledge within the model.

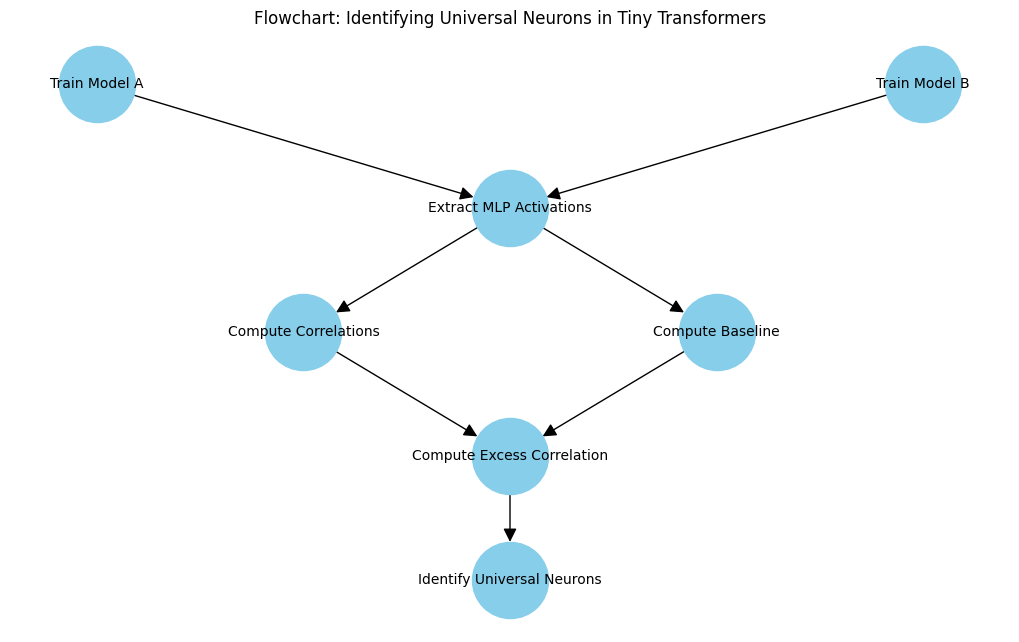

Techniques for Identifying Universal Neurons

Finding universal neurons can be complex, but several methods make the search more tractable. Here are some techniques used in research and in practice.

1. Analyzing Attention Mechanisms

Attention mechanisms are central to how LLMs function. By examining how attention is distributed over the input, alongside which neurons activate in response to specific inputs, researchers can identify their roles and significance. Shifts in attention patterns across contexts may indicate the involvement of universal neurons.
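
One way to make "shifts in attention patterns" concrete is to compute attention weights for the same query in two contexts and measure how far the two distributions differ. The following is a minimal sketch under stated assumptions, not a method taken from any particular library: the vectors are hand-made toy values, and the helper names `attention_weights` and `attention_shift` are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over a list of keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

def attention_shift(weights_a, weights_b):
    """Total variation distance between two attention distributions.

    0.0 means the distributions are identical; 1.0 means they do not overlap.
    """
    return 0.5 * sum(abs(a - b) for a, b in zip(weights_a, weights_b))

# Toy data (assumption): one query vector, and three key vectors per context.
query = [1.0, 0.0]
keys_context_a = [[2.0, 0.0], [0.0, 2.0], [0.0, 0.0]]
keys_context_b = [[0.0, 2.0], [2.0, 0.0], [0.0, 0.0]]

w_a = attention_weights(query, keys_context_a)
w_b = attention_weights(query, keys_context_b)
shift = attention_shift(w_a, w_b)
print(w_a, w_b, shift)
```

In a real model the queries and keys would come from a specific attention head, and the comparison would be averaged over many inputs rather than a single pair of contexts.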

2. Ablation Studies

Ablation studies involve systematically disabling specific neurons or layers to observe the effects on model performance. By determining how the removal of particular neurons influences outcomes across a set of tasks, researchers can infer which neurons are integral to universality.
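
The ablation procedure described above can be sketched on a toy network. This is an illustration under stated assumptions rather than a recipe for a real LLM: the three-neuron hidden layer, the two tasks, their readout weights, and the 0.1 threshold are all invented for the example, and the names `HIDDEN_WEIGHTS`, `TASKS`, and `ablation_drops` are hypothetical.

```python
from itertools import product

# Toy hidden layer (assumption): three ReLU neurons, each reading one input feature.
HIDDEN_WEIGHTS = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]

# Task name -> (readout weights over hidden neurons, bias, ground-truth labeling rule).
# Neuron 0 feeds both tasks; neurons 1 and 2 each feed only one.
TASKS = {
    "task_a": ([1.0, 1.0, 0.0], -1.0, lambda x: x[0] + x[1] > 1.0),
    "task_b": ([1.0, 0.0, 1.0], -1.0, lambda x: x[0] + x[2] > 1.0),
}

def hidden_activations(x, ablated=None):
    """Compute ReLU activations, zeroing out one neuron if `ablated` is given."""
    acts = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in HIDDEN_WEIGHTS]
    if ablated is not None:
        acts[ablated] = 0.0
    return acts

def accuracy(task, inputs, ablated=None):
    """Fraction of inputs where the (possibly ablated) model matches the label."""
    readout, bias, rule = TASKS[task]
    correct = 0
    for x in inputs:
        acts = hidden_activations(x, ablated)
        prediction = sum(r * a for r, a in zip(readout, acts)) + bias > 0.0
        correct += prediction == rule(x)
    return correct / len(inputs)

def ablation_drops(inputs):
    """For each neuron, the accuracy lost on each task when that neuron is zeroed."""
    return {
        neuron: {
            task: accuracy(task, inputs) - accuracy(task, inputs, ablated=neuron)
            for task in TASKS
        }
        for neuron in range(len(HIDDEN_WEIGHTS))
    }

# Evaluate on a small deterministic grid of inputs.
inputs = list(product([0.0, 0.5, 1.0], repeat=3))
drops = ablation_drops(inputs)

# A neuron is a candidate only if removing it hurts every task.
candidates = [n for n, per_task in drops.items() if all(d > 0.1 for d in per_task.values())]
print(drops)
print(candidates)
```

In this toy setup only neuron 0 is flagged, because it is the only neuron whose removal lowers accuracy on both tasks. Zero-ablation is one choice among several; replacing the activation with its mean value is a common alternative.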

3. Cross-Task Performance Evaluation

Evaluating the performance of specific neurons across multiple tasks can reveal their universality. For example, if a neuron consistently contributes to high accuracy across a range of assessments, it is a likely candidate for a universal neuron.
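
A simple proxy for a neuron's "performance" on a task is how strongly its activation correlates with the task labels. The sketch below scores each neuron by its weakest absolute correlation across tasks, so a neuron scores well only if it is informative everywhere. The activation values, labels, neuron names, and the 0.8 cutoff are toy assumptions, and `cross_task_scores` is a hypothetical helper.

```python
import math

def pearson(xs, ys):
    """Pearson correlation; returns 0.0 when either series has no variance."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)

def cross_task_scores(activations, labels):
    """Map each neuron to its minimum |correlation| with the labels across tasks."""
    neurons = next(iter(activations.values())).keys()
    return {
        neuron: min(
            abs(pearson(activations[task][neuron], labels[task])) for task in labels
        )
        for neuron in neurons
    }

# Toy recordings (assumption): four examples per task, two neurons.
labels = {
    "sentiment": [1, 0, 1, 0],
    "topic": [0, 0, 1, 1],
}
activations = {
    "sentiment": {
        "neuron_a": [0.9, 0.1, 0.8, 0.2],
        "neuron_b": [0.9, 0.2, 0.7, 0.1],
    },
    "topic": {
        "neuron_a": [0.1, 0.2, 0.9, 0.8],
        "neuron_b": [0.2, 0.8, 0.8, 0.2],
    },
}

scores = cross_task_scores(activations, labels)
universal_candidates = [n for n, s in scores.items() if s > 0.8]
print(scores)
print(universal_candidates)
```

Here `neuron_b` tracks the sentiment labels but not the topic labels, so taking the minimum across tasks marks it as task-specific rather than universal. Correlation is only suggestive; it pairs naturally with ablation to check that a neuron actually matters to the output.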

4. Activation Patterns

Investigating activation patterns throughout different training phases can also shed light on neuron utility. Neurons that display high activation rates across diverse datasets are strong candidates for universality.
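
The idea of an "activation rate" can be sketched as the fraction of inputs on which a neuron's activation exceeds a threshold, computed separately per dataset. The recorded values, dataset names, and the 0.5 minimum rate below are toy assumptions, and `activation_rate` and `consistently_active` are hypothetical helpers.

```python
def activation_rate(values, threshold=0.0):
    """Fraction of recorded activations that exceed the threshold."""
    return sum(v > threshold for v in values) / len(values)

def consistently_active(recorded, min_rate=0.5, threshold=0.0):
    """Return neurons whose activation rate reaches min_rate on every dataset."""
    neurons = next(iter(recorded.values())).keys()
    selected = []
    for neuron in neurons:
        rates = [
            activation_rate(per_neuron[neuron], threshold)
            for per_neuron in recorded.values()
        ]
        if min(rates) >= min_rate:
            selected.append(neuron)
    return selected

# Toy recordings (assumption): activations of two neurons on two datasets.
recorded = {
    "reviews": {
        "n0": [0.7, 0.0, 0.9, 0.4],
        "n1": [0.0, 0.0, 0.3, 0.0],
    },
    "dialogue": {
        "n0": [0.5, 0.6, 0.0, 0.8],
        "n1": [0.9, 0.8, 0.7, 0.0],
    },
}

active_everywhere = consistently_active(recorded)
print(active_everywhere)
```

In this toy data `n0` is active on three of four inputs in both datasets, while `n1` is rarely active on reviews, so only `n0` is selected. A high activation rate alone does not show that a neuron is useful, since a neuron that fires on nearly everything may carry little information, so this check works best as a first filter before the other techniques.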

Applications of Universal Neurons

Understanding and leveraging universal neurons can significantly impact the deployment of LLMs in various applications, offering enhanced efficiency and performance.

1. Enhanced Natural Language Processing (NLP)

In the field of NLP, universal neurons can improve task completion rates in applications like chatbots, translation services, and content generation. Their adaptability allows for richer and more nuanced interactions.

2. Advanced Sentiment Analysis

Universal neurons can enhance sentiment analysis tools by allowing for deeper understanding of context and nuance in language. This is particularly beneficial in fields such as marketing and customer feedback analysis.

3. Robust Machine Learning Systems

Integrating insights from universal neurons into machine learning systems can lead to the creation of stronger models that adapt to new data and challenges with ease. This adaptability is critical in rapidly changing environments.

Challenges and Considerations

While the concept of universality offers exciting prospects, it also presents several challenges that require careful consideration.

Data Limitations

The availability and diversity of data can significantly affect the identification and utility of universal neurons. Limited or biased datasets may lead to skewed results, reducing the effectiveness of LLMs across various applications.

Model Complexity

The intricate structure of LLMs makes it more challenging to pinpoint universal neurons. A comprehensive understanding of neural interactions and functions is necessary to draw accurate conclusions.

Ethical Considerations

As with any AI system, ethical implications need to be addressed. Because universal neurons influence many tasks at once, any bias they encode may propagate through the model and affect outcomes across applications. Auditing these neurons for fairness and accountability is therefore vital.

The Future of Universality in LLMs

As research advances, the quest to understand and exploit universal neurons will continue. Emerging techniques such as explainable AI (XAI) may further elucidate the workings of these models, granting deeper insights into their structure and function.

Continuous Learning

As model training and architectures advance, continuous learning (CL) practices will likely make universal neurons easier to identify and clarify the roles they play, making them even more integral to performance and adaptability.

Community Collaboration

Involving the broader data science and artificial intelligence communities in exploring universality can lead to more robust findings. Collaborative research can enhance understanding and drive innovation in model development.

Conclusion

Universality in large language models is a fascinating aspect of artificial intelligence, enabling models to perform competently across a wide range of tasks. Identifying universal neurons through techniques such as attention analysis, ablation studies, and cross-task performance evaluation can inform and improve AI applications. As we navigate the challenges and ethical considerations that come with this complexity, collaboration and ongoing research will help unlock the full potential of universality in LLMs.