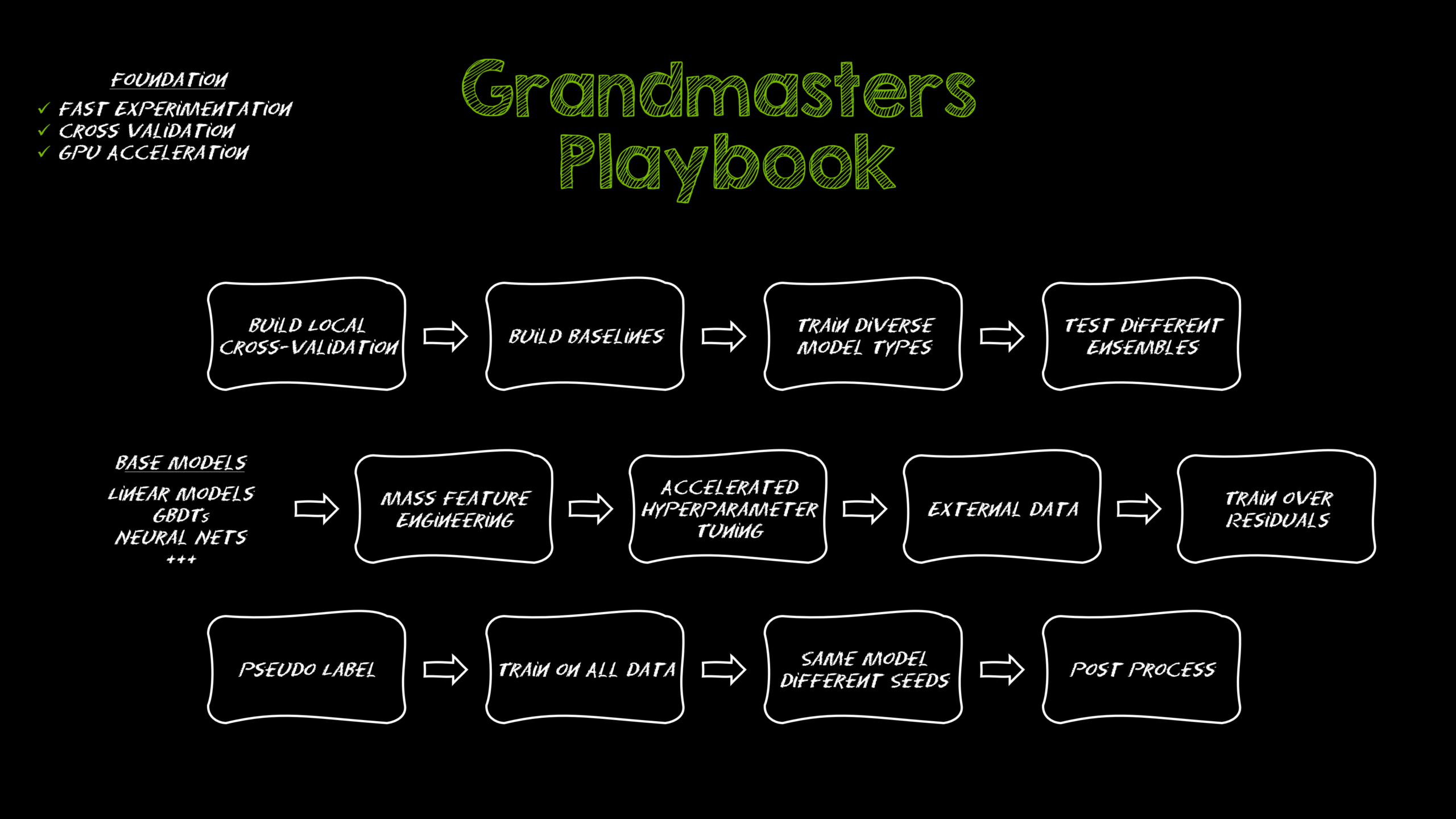

The Kaggle Grandmasters Playbook: 7 Battle-Tested Modeling Techniques for Tabular Data

Introduction

Kaggle has become a proving ground for data science enthusiasts and professionals alike, and Kaggle Grandmasters stand out for their consistent results in competitive data modeling. In this post, we explore seven proven modeling techniques for tabular data. Whether you’re a beginner or an experienced data scientist, these strategies will help you build more accurate models and extract more meaningful insights from your datasets.

Understanding Tabular Data

Before diving into modeling techniques, it’s essential to grasp what tabular data is. Tabular data is structured in rows and columns, much like a spreadsheet. Each row represents a record, while each column signifies a feature. This format is common in various applications, ranging from financial data to healthcare metrics. Understanding the structure is a foundational step toward effective modeling.

1. Feature Engineering: The Art of Creating Variables

Feature engineering involves selecting, modifying, or creating variables to improve a model’s predictive performance. It’s one of the most critical steps in any data science project. Techniques such as binning, normalization, and one-hot encoding (turning each category into its own 0/1 column) can significantly affect your model’s accuracy.

Binning

Binning converts a numerical variable into a categorical one. For example, instead of using age as a continuous variable, you can group it into ranges such as "18-24," "25-34," and so on. This lets a linear model capture non-linear effects, but it also discards the variation within each bin, so check on validation data that it actually helps.
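The age grouping described above can be sketched in a few lines of standard-library Python. The bin edges and labels beyond "18-24" and "25-34" are assumptions made for illustration, as is the helper name `bin_age`.

```python
from bisect import bisect_right

# Hypothetical bin edges and labels; only "18-24" and "25-34" come from the text.
AGE_EDGES = [18, 25, 35, 45, 55, 65]
AGE_LABELS = ["<18", "18-24", "25-34", "35-44", "45-54", "55-64", "65+"]


def bin_age(age: float) -> str:
    """Map a numeric age to the label of the bin that contains it."""
    # bisect_right counts how many edges are <= age, which is the bin index.
    return AGE_LABELS[bisect_right(AGE_EDGES, age)]


ages = [17, 18, 24, 25, 40, 70]
binned = [bin_age(a) for a in ages]
print(binned)
```

Each lower edge is inclusive, so an age of exactly 25 falls into "25-34" rather than "18-24".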

Normalization

Normalization scales numerical features to a standard range, often between 0 and 1. This step matters for algorithms that are sensitive to the scale of the data, such as neural networks and k-nearest neighbors. Tree-based models are largely unaffected by it.
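As a minimal sketch of min-max scaling to the 0 to 1 range, the example below learns the range from training data and reuses it on test data. The function names and the income figures are made up for illustration.

```python
def fit_min_max(values):
    """Return the (min, max) of the training values."""
    return min(values), max(values)


def min_max_scale(values, lo, hi):
    """Scale values using a range learned from training data."""
    span = hi - lo
    if span == 0:
        # A constant feature has no spread to scale; map it to 0.0.
        return [0.0 for _ in values]
    return [(v - lo) / span for v in values]


train_income = [20_000, 35_000, 50_000, 80_000]
test_income = [50_000, 95_000]

lo, hi = fit_min_max(train_income)          # learned from training data only
train_scaled = min_max_scale(train_income, lo, hi)
test_scaled = min_max_scale(test_income, lo, hi)
print(train_scaled, test_scaled)
```

Test values outside the training range land outside 0 to 1 (here 95,000 scales to 1.25). That is expected when the range is learned from training data only, which keeps information about the test set from leaking into preprocessing.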

2. Model Selection: The Right Tool for the Job

Choosing the right model is crucial for achieving optimal results. Each algorithm has its strengths and weaknesses, making it essential to align the model with the problem at hand.

Common Algorithms

- Linear Regression: Suited to regression problems where the target is roughly a linear function of the features.

- Decision Trees: Useful for capturing non-linear relationships while providing interpretability.

- Random Forests: An ensemble method that improves accuracy by averaging the predictions of many decision trees, each trained on a different bootstrap sample of the data.

- Gradient Boosting Machines (GBM): Effective for structured data, GBMs build an ensemble sequentially, with each new weak model fit to the errors of the ensemble so far.

Evaluating Models

Use techniques like cross-validation to assess model performance reliably. This practice helps ensure that your findings are robust and not overly biased by any particular subset of the data.

3. Hyperparameter Tuning: Optimizing Performance

After selecting a model, the next step is hyperparameter tuning. Hyperparameters are settings that dictate how your model learns from data. Fine-tuning these parameters can lead to significant performance gains.

Techniques for Tuning

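- Grid Search: Evaluate every combination in a predefined grid of hyperparameter values and keep the best one.

- Random Search: Sample hyperparameter combinations at random from specified ranges. For the same compute budget, this often matches or beats grid search because it tries more distinct values of each hyperparameter.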
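The two strategies can be compared on a toy problem. In the sketch below, `validation_score` is a hypothetical stand-in for training a model and returning its cross-validated score, and the hyperparameter names and ranges are assumptions chosen for illustration.

```python
import itertools
import random


def validation_score(learning_rate: float, max_depth: int) -> float:
    """Hypothetical stand-in for a cross-validated score (higher is better).

    In a real project this would train a model and return its validation metric.
    """
    return -((learning_rate - 0.1) ** 2) * 100 - ((max_depth - 6) ** 2) * 0.01


def grid_search(learning_rates, max_depths):
    """Evaluate every combination on the grid and return the best one."""
    candidates = itertools.product(learning_rates, max_depths)
    return max(candidates, key=lambda params: validation_score(*params))


def random_search(n_trials: int, seed: int = 0):
    """Sample n_trials configurations at random and return the best one."""
    rng = random.Random(seed)
    candidates = [
        # Learning rate is sampled log-uniformly between 0.001 and 1.
        (10 ** rng.uniform(-3, 0), rng.randint(2, 12))
        for _ in range(n_trials)
    ]
    return max(candidates, key=lambda params: validation_score(*params))


best_grid = grid_search([0.01, 0.1, 0.3], [4, 6, 8])
best_random = random_search(n_trials=9)
print("grid:", best_grid)
print("random:", best_random)
```

Both searches spend nine evaluations here. The grid only ever tries three distinct learning rates, while random search tries nine, which is the usual argument for random search when only a few hyperparameters really matter.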

4. Handling Missing Values: Data Integrity Matters

Missing values are a common issue in tabular datasets. How you handle them can greatly influence your model’s performance. There are various techniques for addressing this challenge.

Imputation Methods

- Mean/Median Imputation: Filling missing values with the mean or median of the existing values.

- K-Nearest Neighbors (KNN): A more sophisticated technique where missing values are predicted based on similar records.
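Median imputation, the simpler of the two methods above, can be sketched as follows. Missing values are represented as `None`, and the function names and sample ages are assumptions for illustration; KNN imputation is not shown.

```python
from statistics import median


def fit_median(values):
    """Compute the median of the observed (non-missing) values."""
    observed = [v for v in values if v is not None]
    return median(observed)


def impute(values, fill_value):
    """Replace each missing entry (None) with fill_value."""
    return [fill_value if v is None else v for v in values]


train_age = [22, None, 35, 41, None, 58]
test_age = [None, 30]

fill = fit_median(train_age)            # learned from training data only
train_filled = impute(train_age, fill)
test_filled = impute(test_age, fill)    # reuse the training median
print(fill, train_filled, test_filled)
```

Computing the fill value on the training split and reusing it elsewhere keeps validation and test data from influencing preprocessing.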

Deleting Missing Data

Sometimes, it may be beneficial to drop records or features with missing values if they constitute a small percentage of the dataset. However, ensure this doesn’t lead to loss of critical information.

5. Cross-Validation: Ensuring Robustness

Cross-validation is a powerful technique for verifying the stability and reliability of your model. It involves splitting your dataset into several parts, training the model on one subset, and validating it on another.

K-Fold Cross-Validation

In this method, the dataset is divided into ‘k’ subsets. The model trains on ‘k-1’ of these subsets and validates on the remaining one. This process is repeated ‘k’ times, allowing each subset to serve as a validation set once. Averaging the ‘k’ validation scores gives a more reliable performance estimate than a single split and makes overfitting easier to detect.
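The splitting procedure can be sketched with index lists alone. The helper name `k_fold_indices` and the shuffle-then-stride way of assigning rows to folds are choices made for this illustration, not the only way to build folds.

```python
import random


def k_fold_indices(n_samples: int, k: int, seed: int = 0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Fold i takes every k-th shuffled index starting at i,
    # so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i, val_idx in enumerate(folds):
        train_idx = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train_idx, val_idx


splits = list(k_fold_indices(n_samples=10, k=5))
for fold, (train_idx, val_idx) in enumerate(splits):
    print(f"fold {fold}: train={len(train_idx)} rows, validate on {sorted(val_idx)}")
```

In a full workflow, a model would be trained on `train_idx` and scored on `val_idx` in each iteration, and the five scores would then be averaged.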

6. Ensemble Methods: Combining Models for Better Predictions

Ensemble methods leverage the strengths of multiple models to enhance predictive accuracy. By combining different approaches, you can reduce bias and variance, leading to a more robust solution.

Bagging and Boosting

- Bagging: Short for Bootstrap Aggregating, this technique improves stability by training multiple versions of a model on different bootstrap samples of the data (drawn with replacement) and averaging their predictions. It mainly reduces variance.

- Boosting: This sequential technique fits each new model to the errors made by the prior models, so the ensemble improves step by step. It mainly reduces bias.
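Bagging is simple enough to sketch from scratch. The example below uses a 1-nearest-neighbor regressor as the base learner purely because it is short to write; the toy data, the function names, and the choice of 25 models are all assumptions for illustration. Boosting is not shown.

```python
import random


def fit_1nn(sample):
    """Base learner: a 1-nearest-neighbor regressor over (x, y) pairs."""
    def predict(x):
        nearest = min(sample, key=lambda pair: abs(pair[0] - x))
        return nearest[1]
    return predict


def bagging_fit(data, n_models: int = 25, seed: int = 0):
    """Train n_models base learners, each on a bootstrap sample of the data."""
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap: draw len(data) rows with replacement.
        sample = [rng.choice(data) for _ in data]
        models.append(fit_1nn(sample))
    return models


def bagging_predict(models, x):
    """Average the predictions of all base learners."""
    return sum(model(x) for model in models) / len(models)


# Toy data following y = 2x, with one noisy point at x = 5.
data = [(x, 2.0 * x) for x in range(10)]
data[5] = (5, 30.0)

models = bagging_fit(data)
single_prediction = fit_1nn(data)(5)
bagged_prediction = bagging_predict(models, 5)
print("single 1-NN at x=5:", single_prediction)
print("bagged at x=5:", bagged_prediction)
```

A single 1-NN model reproduces the noisy value exactly. Any bootstrap sample that happens to omit the noisy point predicts from a neighboring point instead, so the averaged prediction tends to be pulled back toward the underlying trend.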

7. Feature Importance: Understanding Your Data

Understanding which features significantly impact your model’s predictions is crucial. Various techniques can help assess feature importance, enabling you to focus on the most influential variables.

Techniques for Assessing Feature Importance

- Permutation Importance: Measures the effect of shuffling a feature on model performance.

- SHAP Values (SHapley Additive exPlanations): Provide insights into how features contribute to individual predictions, enabling better interpretability.
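Permutation importance can be sketched without any library. In the example below, `model` is a hypothetical already-fitted predictor that uses only the first feature, and mean squared error is an assumed choice of metric. SHAP values are not shown.

```python
import random


def mean_squared_error(model, rows, targets):
    """Average squared difference between predictions and targets."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_features, seed=0):
    """Return the increase in MSE after shuffling each feature column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild the rows with only feature j replaced by its shuffled values.
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_squared_error(model, shuffled, targets) - baseline)
    return importances


# Hypothetical fitted model: depends on feature 0 only and ignores feature 1.
def model(row):
    return 3.0 * row[0]


rows = [(float(i), float(i % 3)) for i in range(20)]
targets = [model(r) for r in rows]

importances = permutation_importance(model, rows, targets, n_features=2)
print(importances)
```

Shuffling the feature the model relies on raises the error, while shuffling the ignored feature leaves it unchanged. In practice the scores are usually computed on held-out data and averaged over several shuffles.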

Conclusion

Mastering data modeling for tabular data requires a blend of skill, experience, and the right techniques. The seven strategies discussed here (feature engineering, model selection, hyperparameter tuning, handling missing values, cross-validation, ensemble methods, and understanding feature importance) form a practical framework for improving your models. By applying these battle-tested techniques, you can improve your predictive accuracy and gain deeper insights from your data.

Whether you’re participating in competitions on platforms like Kaggle or working on your own projects, these strategies will help you navigate the complexities of data science with confidence.

Elementor Pro

In stock

PixelYourSite Pro

In stock

Rank Math Pro

In stock

Related posts

Building a WordPress Plugin | Jon learns to code with AI

How to add custom Javascript code to WordPress website

6 Best FREE WordPress Contact Form Plugins In 2025!

Solve Puzzles to Silence Alarms and Boost Alertness

Conheça AI do WordPress para construção de sites

WordPress vs Shopify: The Ultimate Comparison for Online Store Owners | Shopify Tutorial

Apple Ends iCloud Support for iOS 10, macOS Sierra on Sept 15, 2025

How to Speed up WordPress Website using AI 🔥(RapidLoad AI Plugin Review)

Bringing AI Agents Into Any UI: The AG-UI Protocol for Real-Time, Structured Agent–Frontend Streams

Web Hosting vs WordPress Web Hosting | The Difference May Break Your Site

Google Lays Off 200+ AI Contractors Amid Unionization Disputes

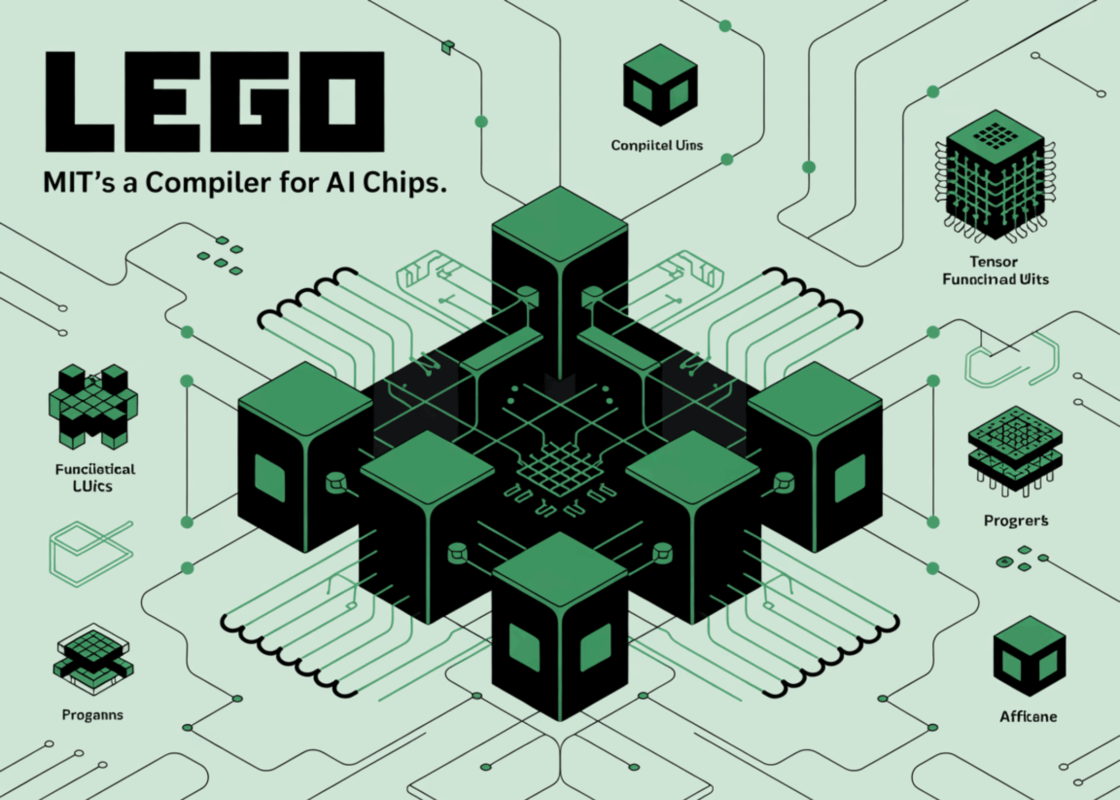

MIT’s LEGO: A Compiler for AI Chips that Auto-Generates Fast, Efficient Spatial Accelerators

Products

-

Rayzi : Live streaming, PK Battel, Multi Live, Voice Chat Room, Beauty Filter with Admin Panel

Rayzi : Live streaming, PK Battel, Multi Live, Voice Chat Room, Beauty Filter with Admin Panel

$98.40Original price was: $98.40.$34.44Current price is: $34.44.In stock

-

Team Showcase – WordPress Plugin

Team Showcase – WordPress Plugin

$53.71Original price was: $53.71.$4.02Current price is: $4.02.In stock

-

ChatBot for WooCommerce – Retargeting, Exit Intent, Abandoned Cart, Facebook Live Chat – WoowBot

ChatBot for WooCommerce – Retargeting, Exit Intent, Abandoned Cart, Facebook Live Chat – WoowBot

$53.71Original price was: $53.71.$4.02Current price is: $4.02.In stock

-

FOX – Currency Switcher Professional for WooCommerce

FOX – Currency Switcher Professional for WooCommerce

$41.00Original price was: $41.00.$4.02Current price is: $4.02.In stock

-

WooCommerce Attach Me!

WooCommerce Attach Me!

$41.00Original price was: $41.00.$4.02Current price is: $4.02.In stock

-

Magic Post Thumbnail Pro

Magic Post Thumbnail Pro

$53.71Original price was: $53.71.$3.69Current price is: $3.69.In stock

-

Bus Ticket Booking with Seat Reservation PRO

Bus Ticket Booking with Seat Reservation PRO

$53.71Original price was: $53.71.$4.02Current price is: $4.02.In stock

-

GiveWP + Addons

GiveWP + Addons

$53.71Original price was: $53.71.$3.85Current price is: $3.85.In stock

-

JetBlog – Blogging Package for Elementor Page Builder

JetBlog – Blogging Package for Elementor Page Builder

$53.71Original price was: $53.71.$4.02Current price is: $4.02.In stock

-

ACF Views Pro

ACF Views Pro

$62.73Original price was: $62.73.$3.94Current price is: $3.94.In stock

-

Kadence Theme Pro

Kadence Theme Pro

$53.71Original price was: $53.71.$3.69Current price is: $3.69.In stock

-

LoginPress Pro

LoginPress Pro

$53.71Original price was: $53.71.$4.02Current price is: $4.02.In stock

-

ElementsKit – Addons for Elementor

ElementsKit – Addons for Elementor

$53.71Original price was: $53.71.$4.02Current price is: $4.02.In stock

-

CartBounty Pro – Save and recover abandoned carts for WooCommerce

CartBounty Pro – Save and recover abandoned carts for WooCommerce

$53.71Original price was: $53.71.$3.94Current price is: $3.94.In stock

-

Checkout Field Editor and Manager for WooCommerce Pro

Checkout Field Editor and Manager for WooCommerce Pro

$53.71Original price was: $53.71.$3.94Current price is: $3.94.In stock

-

Social Auto Poster

Social Auto Poster

$53.71Original price was: $53.71.$3.94Current price is: $3.94.In stock

-

Vitepos Pro

Vitepos Pro

$53.71Original price was: $53.71.$12.30Current price is: $12.30.In stock

-

Digits : WordPress Mobile Number Signup and Login

Digits : WordPress Mobile Number Signup and Login

$53.71Original price was: $53.71.$3.94Current price is: $3.94.In stock

-

JetEngine For Elementor

JetEngine For Elementor

$53.71Original price was: $53.71.$3.94Current price is: $3.94.In stock

-

BookingPress Pro – Appointment Booking plugin

BookingPress Pro – Appointment Booking plugin

$53.71Original price was: $53.71.$3.94Current price is: $3.94.In stock

-

Polylang Pro

Polylang Pro

$53.71Original price was: $53.71.$3.94Current price is: $3.94.In stock

-

All-in-One WP Migration Unlimited Extension

All-in-One WP Migration Unlimited Extension

$53.71Original price was: $53.71.$3.94Current price is: $3.94.In stock

-

Slider Revolution Responsive WordPress Plugin

Slider Revolution Responsive WordPress Plugin

$53.71Original price was: $53.71.$4.51Current price is: $4.51.In stock

-

Advanced Custom Fields (ACF) Pro

Advanced Custom Fields (ACF) Pro

$53.71Original price was: $53.71.$3.94Current price is: $3.94.In stock

-

Gillion | Multi-Concept Blog/Magazine & Shop WordPress AMP Theme

Rated 4.60 out of 5

Gillion | Multi-Concept Blog/Magazine & Shop WordPress AMP Theme

Rated 4.60 out of 5$53.71Original price was: $53.71.$5.00Current price is: $5.00.In stock

-

Eidmart | Digital Marketplace WordPress Theme

Rated 4.70 out of 5

Eidmart | Digital Marketplace WordPress Theme

Rated 4.70 out of 5$53.71Original price was: $53.71.$5.00Current price is: $5.00.In stock

-

Phox - Hosting WordPress & WHMCS Theme

Rated 4.89 out of 5

Phox - Hosting WordPress & WHMCS Theme

Rated 4.89 out of 5$53.71Original price was: $53.71.$5.17Current price is: $5.17.In stock

-

Cuinare - Multivendor Restaurant WordPress Theme

Rated 4.14 out of 5

Cuinare - Multivendor Restaurant WordPress Theme

Rated 4.14 out of 5$53.71Original price was: $53.71.$5.17Current price is: $5.17.In stock

-

Eikra - Education WordPress Theme

Rated 4.60 out of 5

Eikra - Education WordPress Theme

Rated 4.60 out of 5$62.73Original price was: $62.73.$5.08Current price is: $5.08.In stock

-

Tripgo - Tour Booking WordPress Theme

Rated 5.00 out of 5

Tripgo - Tour Booking WordPress Theme

Rated 5.00 out of 5$53.71Original price was: $53.71.$4.76Current price is: $4.76.In stock