
Fighting Back Against Attacks in Federated Learning

Understanding Federated Learning

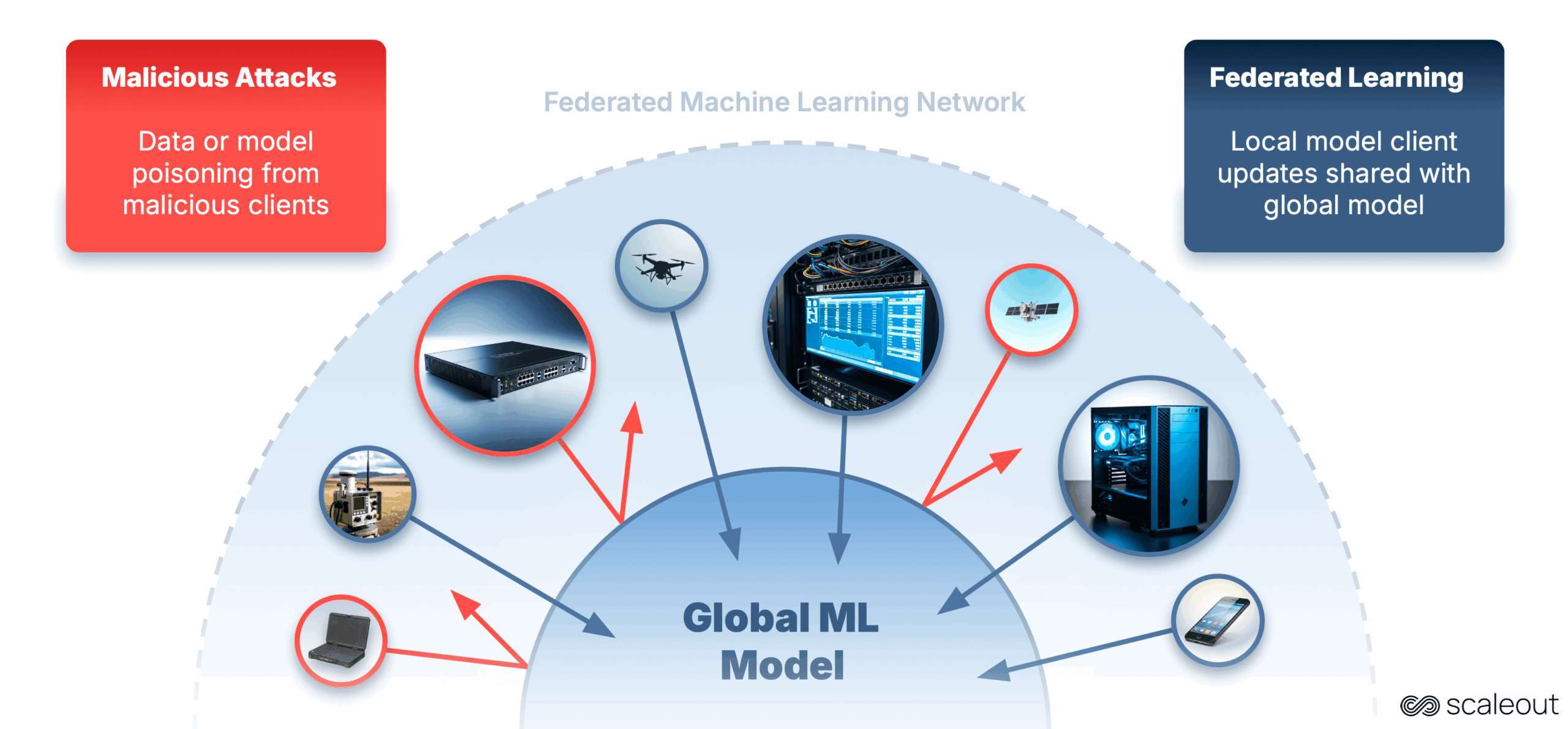

In artificial intelligence and machine learning, federated learning has emerged as an important alternative to centralized training. Instead of pooling sensitive data into a central repository, it trains a shared model across many decentralized devices: each device trains on its own data and sends only model updates to a central server, which combines them. Because raw data stays on the device, this approach improves privacy while still drawing on large amounts of data to improve model accuracy.

The Necessity of Security in Federated Learning

As with any technology, federated learning is not immune to threats. Given the distributed nature of this approach, various attacks can compromise both the integrity of the model and the privacy of the data. Cybersecurity must be a fundamental consideration in the design and implementation of federated learning systems.

Common Types of Attacks

1. Model Inversion Attacks

One prevalent attack in federated learning is the model inversion attack. Here, an adversary attempts to reconstruct sensitive individual data by analyzing the model updates sent to the server. This can lead to serious privacy breaches, as adversaries might extract personally identifiable information from seemingly innocuous model updates.
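
To make the risk concrete, here is a minimal sketch of how an update can leak its input. It assumes a deliberately simple setting that the discussion above does not specify: a linear model with squared-error loss, and an update computed from a single training example. All names and values are hypothetical. In that setting the weight gradient is the input scaled by the prediction error, and the bias gradient is the prediction error itself, so dividing one by the other recovers the input exactly.

```python
def single_example_gradient(weights, bias, features, label):
    """Gradient of 0.5 * (w.x + b - y)^2 for one training example."""
    prediction = sum(w * x for w, x in zip(weights, features)) + bias
    residual = prediction - label
    grad_weights = [residual * x for x in features]  # dL/dw = residual * x
    grad_bias = residual                             # dL/db = residual
    return grad_weights, grad_bias


def reconstruct_features(grad_weights, grad_bias):
    """Recover the private input from the shared gradient alone."""
    if grad_bias == 0:
        raise ValueError("residual is zero; this update reveals nothing")
    return [g / grad_bias for g in grad_weights]


# Hypothetical sensitive record held on a client device.
private_features = [0.7, -1.2, 3.4]

# The client computes its update and sends it to the server.
grad_w, grad_b = single_example_gradient(
    weights=[0.1, 0.2, -0.3], bias=0.05, features=private_features, label=1.0
)

# Anyone who sees the update can invert it without seeing the data.
recovered = reconstruct_features(grad_w, grad_b)
```

Real models and batched updates are far harder to invert than this toy case, but the example shows why a model update should not be treated as harmless just because it is not raw data.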

2. Poisoning Attacks

Another significant threat is the poisoning attack, in which a malicious participant submits false or misleading model updates. This can degrade the model’s performance or bias its predictions, undermining the usefulness of the federated learning system.
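
The following sketch illustrates why a single participant can do so much damage. It assumes the server combines updates with a plain coordinate-wise average, which is a common choice but an assumption here; the update values are invented for illustration.

```python
def federated_average(updates):
    """Plain coordinate-wise mean of client updates (no defenses)."""
    count = len(updates)
    return [sum(column) / count for column in zip(*updates)]


# Hypothetical updates from four honest clients (two parameters each).
honest_updates = [
    [0.10, -0.20],
    [0.12, -0.18],
    [0.09, -0.22],
    [0.11, -0.19],
]

# One malicious client submits an extreme update.
malicious_update = [50.0, 50.0]

clean = federated_average(honest_updates)
poisoned = federated_average(honest_updates + [malicious_update])
```

Here `clean` is roughly `[0.105, -0.1975]`, while `poisoned` is roughly `[10.084, 9.842]`: one attacker out of five participants has moved the aggregate far from anything the honest clients proposed.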

3. Eavesdropping Attacks

Eavesdropping attacks occur when an outsider monitors the communication between the devices and the central server. By capturing model updates, attackers can potentially glean insights into the underlying data and exploit vulnerabilities.

Strategies for Mitigating Risks

1. Enhanced Model Update Encryption

To protect against eavesdropping, model updates should be encrypted end to end between each device and the server. Even if an attacker intercepts the traffic, they cannot read the updates without the decryption keys.

2. Differential Privacy Techniques

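Incorporating differential privacy techniques can reduce the risks associated with model inversion attacks. Adding calibrated noise to model updates, usually after limiting the size of each update, bounds how much any single data point can influence what is shared. This makes it much harder to infer individual records and so enhances user privacy.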
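
One common way to apply this idea is to clip each update to a maximum norm and then add Gaussian noise scaled to that norm. The sketch below shows only that mechanism. The function names and the parameters `max_norm` and `noise_multiplier` are illustrative assumptions, and the code does not compute the resulting privacy budget, which requires separate accounting.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(value * value for value in update))
    if norm <= max_norm:
        return list(update)
    scale = max_norm / norm
    return [value * scale for value in update]


def privatize_update(update, max_norm, noise_multiplier, rng):
    """Clip the update, then add Gaussian noise to every coordinate."""
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm  # noise scales with the clipping bound
    return [value + rng.gauss(0.0, sigma) for value in clipped]


# Hypothetical client update with L2 norm 5.0, clipped to norm 1.0.
rng = random.Random(42)  # seeded only to make the sketch repeatable
noisy_update = privatize_update(
    [3.0, 4.0], max_norm=1.0, noise_multiplier=1.1, rng=rng
)
```

Clipping matters as much as the noise: without a bound on each update, no fixed amount of noise can hide an arbitrarily large contribution. More noise gives stronger privacy but a less accurate model, so both parameters need tuning for a given task.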

3. Robust Aggregation Algorithms

Robust aggregation methods can limit the impact of poisoning attacks. Instead of a plain average, the server can use statistics that resist outliers, such as a coordinate-wise median or trimmed mean, or weight each contribution by an estimate of its reliability, so that malicious updates have less influence on the overall model.
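
As an illustration, here is a minimal sketch of two outlier-resistant aggregators, a coordinate-wise median and a trimmed mean. These are examples chosen for this sketch rather than the only options, and the update values are invented.

```python
import statistics


def coordinate_median(updates):
    """Take the median of each parameter across all client updates."""
    return [statistics.median(column) for column in zip(*updates)]


def trimmed_mean(updates, trim):
    """Drop the `trim` smallest and largest values per parameter, then average."""
    if 2 * trim >= len(updates):
        raise ValueError("trim is too large for the number of updates")
    result = []
    for column in zip(*updates):
        kept = sorted(column)[trim:len(column) - trim]
        result.append(sum(kept) / len(kept))
    return result


# Four hypothetical honest updates plus one extreme, malicious update.
updates = [
    [0.10, -0.20],
    [0.12, -0.18],
    [0.09, -0.22],
    [0.11, -0.19],
    [50.0, 50.0],
]

median_result = coordinate_median(updates)
trimmed_result = trimmed_mean(updates, trim=1)
```

Both aggregators return approximately `[0.11, -0.19]` here, close to the honest updates, because the extreme values are ignored rather than averaged in. The protection holds only while attackers are a minority: `trim` must be at least the number of malicious clients, and the median fails once they make up half of the participants.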

Continuous Monitoring and Evaluation

To maintain the integrity of federated learning systems, continuous monitoring is crucial. Regularly evaluating model performance and inspecting incoming updates can reveal anomalies that point to an attack. This proactive approach allows for quick responses to emerging threats.
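
One simple form of monitoring is to compare the size of each incoming update with the others in the same round. The sketch below flags updates whose norm is an outlier by the modified z-score, which is based on the median absolute deviation. The function names are hypothetical, and the threshold of 3.5 is a conventional default for this score, not a value tuned for federated learning.

```python
import math
import statistics


def update_norm(update):
    """L2 norm of a single client update."""
    return math.sqrt(sum(value * value for value in update))


def flag_suspicious(updates, threshold=3.5):
    """Return indices of updates whose norm is an outlier in this round."""
    norms = [update_norm(update) for update in updates]
    median = statistics.median(norms)
    mad = statistics.median([abs(norm - median) for norm in norms])
    if mad == 0:
        # At least half the norms are identical; flag anything that differs.
        return [i for i, norm in enumerate(norms) if norm != median]
    scores = [0.6745 * abs(norm - median) / mad for norm in norms]
    return [i for i, score in enumerate(scores) if score > threshold]


# Hypothetical round: four ordinary updates and one extreme update.
round_updates = [
    [0.10, -0.20],
    [0.12, -0.18],
    [0.09, -0.22],
    [0.11, -0.19],
    [50.0, 50.0],
]

flagged = flag_suspicious(round_updates)
```

In this round only the last update (index 4) is flagged. A norm check like this catches crude attacks only. An attacker who keeps updates within the normal size range would pass it, so it complements rather than replaces regular evaluation of the model itself.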

Building a Trustworthy Federation

Creating a trustworthy federated learning environment is vital for its success. This involves establishing clear protocols around data-sharing and consent, ensuring that all participating entities adhere to strict security standards. Education and awareness among participants about potential risks are equally important.

Advantages of a Secure Federated Learning System

1. Enhanced Privacy Protection

By integrating robust security measures, federated learning can provide users with greater confidence that their personal data will remain private. This is crucial in sectors such as healthcare and finance, where sensitive information is often involved.

2. Improved Data Utilization

Secure federated learning systems enable organizations to utilize a broader range of data without compromising data privacy. This can lead to the development of more accurate and effective machine learning models.

3. Strengthened Trust and Collaboration

A secure environment fosters trust among participants, encouraging collaboration and data sharing. When users feel confident in the security of their data, they are more likely to contribute to federated learning initiatives.

Future Directions in Federated Learning Security

As federated learning continues to evolve, so too must the security frameworks that support it. Ongoing research and innovation in machine learning security will play a pivotal role in adapting to emerging threats. Here are some anticipated future directions:

1. AI-Driven Threat Detection

Leveraging artificial intelligence for threat detection can enhance the security measures in federated learning. Intelligent algorithms can analyze patterns and recognize unusual behavior, aiding in the swift identification of potential attacks.

2. Federated Learning with Blockchain Technology

Integrating blockchain technology with federated learning could add an extra layer of security. Recording model updates, or their hashes, on a decentralized, append-only ledger makes tampering evident, so it becomes difficult for attackers to alter updates after submission without detection.
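
The core mechanism behind that integrity property is hash chaining, which can be sketched in a few lines. The example below is a single-party hash chain, not a blockchain: it has no consensus protocol or distribution across nodes. The record fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(previous_hash, round_id, client_id, update):
    """Hash an entry together with the hash of the entry before it."""
    payload = json.dumps(
        {"prev": previous_hash, "round": round_id,
         "client": client_id, "update": update},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(ledger, round_id, client_id, update):
    """Append a model update to the ledger, linking it to the previous entry."""
    previous_hash = ledger[-1]["hash"] if ledger else GENESIS_HASH
    ledger.append({
        "round": round_id,
        "client": client_id,
        "update": list(update),
        "hash": entry_hash(previous_hash, round_id, client_id, list(update)),
    })


def verify_ledger(ledger):
    """Recompute every hash in order; any altered entry breaks the chain."""
    previous_hash = GENESIS_HASH
    for entry in ledger:
        expected = entry_hash(
            previous_hash, entry["round"], entry["client"], entry["update"]
        )
        if entry["hash"] != expected:
            return False
        previous_hash = entry["hash"]
    return True


ledger = []
append_entry(ledger, round_id=1, client_id="client-a", update=[0.10, -0.20])
append_entry(ledger, round_id=1, client_id="client-b", update=[0.12, -0.18])
valid_before = verify_ledger(ledger)

ledger[0]["update"][0] = 9.9  # simulate tampering with a recorded update
valid_after = verify_ledger(ledger)
```

Verification succeeds before the change and fails after it. An attacker who controlled the whole ledger could still recompute every hash, which is the gap a real blockchain aims to close by replicating the ledger across independent parties.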

3. Standardization of Security Protocols

Establishing industry-wide standards for security in federated learning will provide a consistent framework for organizations to follow. This standardization can help promote best practices and streamline the implementation of security measures.

Conclusion

The potential of federated learning is immense, but it comes with real security challenges. Addressing them through robust security protocols and continuous evaluation is crucial to deploying federated learning systems successfully. By prioritizing security and staying ahead of threats, organizations can harness decentralized machine learning while protecting the integrity and privacy of their data.