
Best-in-Class Multimodal RAG: How the Llama 3.2 NeMo Retriever Embedding Model Boosts Pipeline Accuracy

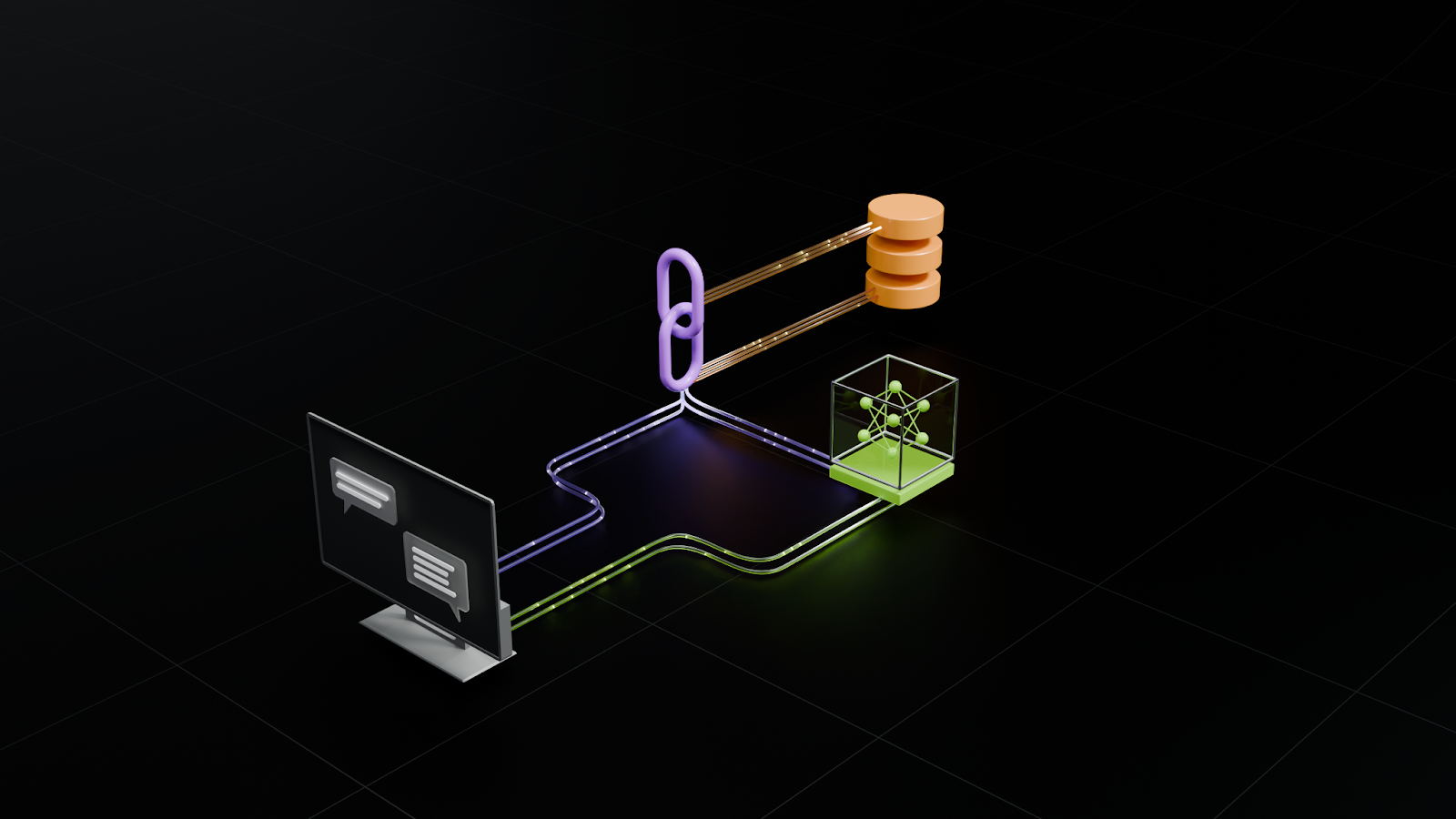

Introduction to Multimodal Retrieval-Augmented Generation (RAG)

Multimodal capabilities, meaning the ability to work with more than one kind of data, are becoming an important way to improve machine learning systems. One notable development in this area is the Llama 3.2 NeMo Retriever embedding model. It does not generate text itself. Instead, it powers the retrieval step of retrieval-augmented generation (RAG) pipelines, where better retrieval translates into more accurate answers. This article explores how the Llama 3.2 NeMo Retriever improves pipeline accuracy across diverse use cases.

Understanding Retrieval-Augmented Generation (RAG)

What is RAG?

Retrieval-Augmented Generation (RAG) combines information retrieval with text generation. Before generating a response, a RAG system retrieves relevant information from a large collection of documents and passes it to the generator as context. The output is therefore relevant to the question and grounded in source data, not only in what the generator memorized during training. This approach can significantly improve applications that must answer questions over large or frequently changing bodies of information.
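The retrieve-then-generate flow can be sketched in a few lines of Python. This is a toy under stated assumptions: `embed` is a hypothetical stand-in that turns text into sparse word counts so the example runs without any model (a real pipeline would call a dense neural embedding model such as the NeMo Retriever), and the generator call is omitted. The sketch stops at building the augmented prompt a generator would receive.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Hypothetical stand-in for a neural embedding model:
    a sparse bag-of-words vector mapping word -> count."""
    return Counter(w.strip(".,?!").lower() for w in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents that score highest against the query."""
    q = embed(query)
    scored = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return scored[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context for a generator model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "The warranty covers parts and labor for two years.",
    "Our office is closed on public holidays.",
    "Returns are accepted within 30 days of purchase.",
]
print(build_prompt("How long is the warranty?", docs, k=1))
```

Swapping the toy `embed` for a real embedding model changes retrieval quality, not the shape of the pipeline.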

The Role of Multimodal Models

Multimodal models take RAG a step further by working with more than one type of data. The Llama 3.2 family, for example, includes vision models that accept both text and images. In a RAG pipeline, multimodal retrieval lets the system draw on content such as charts, diagrams, and scanned pages alongside plain text, which can produce richer and more complete answers. This capability is especially useful in sectors that rely on multiple data formats, such as healthcare, education, and entertainment.
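The key idea behind a multimodal retriever is that text and images are mapped into the same vector space, so a single search covers both. The sketch below assumes the embeddings were computed ahead of time: the three-dimensional vectors are made-up illustrative values, not real model output, and the file names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    name: str                   # hypothetical identifier for a stored item
    modality: str               # "text" or "image"
    vector: tuple[float, ...]   # precomputed embedding (made-up values here)


def cosine(a, b) -> float:
    """Cosine similarity between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def search(query_vec, items: list[Item], k: int = 2) -> list[Item]:
    """Rank items of every modality together in one shared vector space."""
    return sorted(items, key=lambda it: cosine(query_vec, it.vector), reverse=True)[:k]


index = [
    Item("quarterly-report.txt", "text", (0.9, 0.1, 0.0)),
    Item("revenue-chart.png", "image", (0.8, 0.3, 0.1)),
    Item("office-photo.jpg", "image", (0.0, 0.2, 0.9)),
]
query = (1.0, 0.2, 0.0)  # made-up embedding for a query like "revenue growth"
for item in search(query, index):
    print(item.modality, item.name)
```

Because both modalities share one space, the chart image can outrank unrelated text (and vice versa) without any modality-specific logic in the search code.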

Introducing Llama 3.2 NeMo Retriever Embedding Model

Key Features of the Llama 3.2 NeMo Retriever

The Llama 3.2 NeMo Retriever embedding model is designed to improve retrieval accuracy, which makes it particularly effective on complex datasets that mix text and visual content. Here are some of its standout features:

- Enhanced Retrieval Accuracy: The model maps queries and documents into a shared vector space in which related items lie close together, so the context passed to the generator is more likely to be the right context.

- Scalability: Documents are embedded once, ahead of time, and each query then reduces to a similarity search over stored vectors. Paired with a suitable vector index, this lets the approach scale to large and growing collections.

- Flexibility: It embeds both text and images (for example, document pages containing charts or tables), making it versatile for applications ranging from academic research to customer service.

- User-Friendly Integration: It is designed to slot into existing RAG workflows as the retrieval component, minimizing disruption to the rest of the pipeline.
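The scalability point rests on a simple property of embedding-based retrieval: documents are embedded and normalized once, at indexing time, and each query then costs one vector comparison per stored document. A minimal in-memory index under that assumption is sketched below. The class and method names are hypothetical, vectors are supplied by the caller, and a production system would typically replace the linear scan with an approximate nearest-neighbor index.

```python
import heapq
import math


class VectorIndex:
    """Hypothetical in-memory index: vectors are normalized once on insert,
    so each query needs only dot products against the stored vectors."""

    def __init__(self) -> None:
        self._ids: list[str] = []
        self._vecs: list[list[float]] = []

    @staticmethod
    def _normalize(vec: list[float]) -> list[float]:
        norm = math.sqrt(sum(x * x for x in vec))
        if norm == 0:
            raise ValueError("cannot index or query a zero vector")
        return [x / norm for x in vec]

    def add(self, doc_id: str, vec: list[float]) -> None:
        # The per-document cost (embedding + normalization) is paid once, here.
        self._ids.append(doc_id)
        self._vecs.append(self._normalize(vec))

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        q = self._normalize(query)
        scores = (
            (sum(a * b for a, b in zip(q, v)), doc_id)
            for doc_id, v in zip(self._ids, self._vecs)
        )
        # heapq.nlargest keeps only the k best scores instead of sorting everything.
        return [(doc_id, score) for score, doc_id in heapq.nlargest(k, scores)]


index = VectorIndex()
index.add("faq-returns", [0.1, 0.9, 0.0])
index.add("faq-warranty", [0.9, 0.1, 0.1])
index.add("faq-shipping", [0.2, 0.2, 0.9])
print(index.search([1.0, 0.0, 0.0], k=2))
```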

Technical Architecture

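Like other neural embedding models, the Llama 3.2 NeMo Retriever passes each input through a stack of neural network layers and outputs a fixed-length vector, or embedding. Inputs with similar meaning receive nearby vectors, so relevance can be scored by comparing a query's embedding with each document's embedding. Because this comparison reflects meaning rather than shared keywords, it can surface relevant content that traditional keyword-based systems would miss.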
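Relevance between a query embedding q and a document embedding d, each with n components, is commonly scored with cosine similarity (the notation here is ours, not taken from the model's documentation). When both vectors are normalized to unit length, the score reduces to a plain dot product, which is why many vector stores normalize embeddings at indexing time:

```latex
\operatorname{sim}(\mathbf{q}, \mathbf{d})
  = \frac{\mathbf{q} \cdot \mathbf{d}}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{d} \rVert}
  = \frac{\sum_{i=1}^{n} q_i d_i}{\sqrt{\sum_{i=1}^{n} q_i^2}\,\sqrt{\sum_{i=1}^{n} d_i^2}},
\qquad
\lVert \mathbf{q} \rVert = \lVert \mathbf{d} \rVert = 1
  \;\Rightarrow\; \operatorname{sim}(\mathbf{q}, \mathbf{d}) = \mathbf{q} \cdot \mathbf{d}.
```

Scores range from -1 to 1, and a higher score means the document is judged more relevant to the query.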

How Llama 3.2 Boosts Pipeline Accuracy

Improved Contextual Understanding

A critical factor in pipeline accuracy is how well retrieval captures the context of a query. The embedding model itself does not generate text: it selects the passages and images that a separate generator model then uses as context. When retrieval surfaces relevant, precise information, the generated output is better grounded, which improves both the reliability and the quality of the responses.
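One practical way to keep the generator grounded is to pass along only retrieved items whose similarity score clears a threshold, and to fall back to a different action when nothing qualifies, rather than handing the generator weakly related material. The sketch assumes the retriever returns (document ID, score) pairs; the function name, the document IDs, and the 0.5 cutoff are illustrative assumptions that would need tuning on real data.

```python
def select_context(
    hits: list[tuple[str, float]], min_score: float = 0.5, k: int = 3
) -> list[str]:
    """Keep at most k retrieved items whose similarity score is >= min_score.

    `hits` are (doc_id, score) pairs from a retriever; the default cutoff is
    an illustrative assumption, not a recommended value.
    """
    ranked = sorted(hits, key=lambda h: h[1], reverse=True)
    return [doc_id for doc_id, score in ranked if score >= min_score][:k]


hits = [("manual-p12", 0.82), ("blog-post", 0.31), ("manual-p40", 0.67)]
context = select_context(hits)
if context:
    print("Ground the answer in:", context)
else:
    print("No sufficiently relevant context; ask a clarifying question instead.")
```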

Streamlined Workflows

Integrating the Llama 3.2 NeMo Retriever into existing workflows can streamline them considerably. In customer support, for instance, the retriever can search a comprehensive knowledge base for the articles most relevant to a customer inquiry, and a generator model can use them to draft accurate responses in real time.
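Whether retrieval is actually more accurate on a given knowledge base is worth measuring rather than assuming. A common metric is recall@k; with exactly one correct document per question, it is the fraction of test questions whose correct document appears among the top k results. The sketch below computes it from hypothetical labeled data (the question IDs, ranked result lists, and gold document IDs are all made up). Running it on the same questions for two retrievers shows which one serves the pipeline better.

```python
def recall_at_k(ranked: dict[str, list[str]], gold: dict[str, str], k: int) -> float:
    """Fraction of questions whose gold document is in the top-k results.

    Assumes exactly one correct document per question.
    """
    if not gold:
        raise ValueError("need at least one labeled question")
    hits = sum(1 for qid, doc in gold.items() if doc in ranked.get(qid, [])[:k])
    return hits / len(gold)


# Hypothetical evaluation data: ranked retriever output and the correct document.
ranked = {
    "q1": ["kb-7", "kb-2", "kb-9"],
    "q2": ["kb-4", "kb-1", "kb-3"],
    "q3": ["kb-5", "kb-8", "kb-6"],
    "q4": ["kb-2", "kb-7", "kb-1"],
}
gold = {"q1": "kb-7", "q2": "kb-1", "q3": "kb-0", "q4": "kb-1"}

for k in (1, 3):
    print(f"recall@{k} = {recall_at_k(ranked, gold, k):.2f}")
```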

Multimodal Applications

The versatility of the Llama 3.2 NeMo Retriever extends across modalities. In healthcare, for example, a pipeline built on it could index medical records (text) together with imaging data (such as X-rays), so that the most relevant items can be retrieved for a generator model to turn into comprehensive reports or treatment suggestions. Audio, such as recorded patient interactions, would typically need to be transcribed into text before it can be embedded. This multimodal retrieval adds depth to the context available to the generator, which makes it valuable in decision-support processes.

Real-World Applications of Llama 3.2

Customer Support and Service

With the Llama 3.2 NeMo Retriever, businesses can enhance their customer support operations. By using it to surface relevant information quickly, companies can deliver prompt, relevant responses and improve user satisfaction. Its retrieval capabilities help ensure that agents have access to the most pertinent information, leading to more effective resolutions.

Content Creation

Content creators can also benefit from the Llama 3.2 NeMo Retriever. It provides quick access to a wealth of source material, enabling writers and marketers to produce high-quality content efficiently. Because a RAG pipeline grounds generation in retrieved sources, the resulting content is more likely to be factually accurate as well as engaging.

Education and Research Enhancement

In educational settings, a RAG pipeline built on the Llama 3.2 NeMo Retriever can support personalized learning by retrieving resources tailored to each student's needs, helping educators enhance student engagement. Researchers can use it for literature reviews, retrieving relevant papers, figures, and tables for a generator to summarize, which can speed up their work.

Challenges and Considerations

Data Quality

Although the Llama 3.2 NeMo Retriever enhances accuracy, its effectiveness still depends on the quality of the underlying data. Poor-quality or biased data can lead to incorrect outputs, which is why rigorous data curation and validation are important.
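Part of that curation can be automated before anything is embedded. The sketch below drops blank documents and exact duplicates after normalizing case and whitespace, so the index is not padded with repeated or empty entries. It is an illustrative first pass under those assumptions: it does not catch near-duplicates, factual errors, or bias, which need separate checks.

```python
import hashlib


def clean_corpus(docs: list[str]) -> list[str]:
    """Remove blank documents and exact duplicates (ignoring case and
    whitespace), keeping the first occurrence of each."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        normalized = " ".join(doc.lower().split())
        if not normalized:
            continue  # skip empty or whitespace-only documents
        # Storing a fixed-size hash keeps the seen-set small for long documents.
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        kept.append(doc)
    return kept


raw = [
    "Reset your router by holding the button for 10 seconds.",
    "  reset your router by holding the button for 10 seconds. ",
    "",
    "Firmware updates install automatically overnight.",
]
print(clean_corpus(raw))
```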

Ethical Implications

As with any AI technology, ethical considerations must be taken into account. Transparency, accountability, and bias mitigation are crucial in ensuring that the Llama 3.2 model is used responsibly.

Conclusion

The Llama 3.2 NeMo Retriever embedding model represents a significant advance for multimodal RAG systems. By improving retrieval accuracy across text and images and scaling to large document collections, it can raise the accuracy of RAG pipelines in a variety of fields. Because it integrates into existing workflows as the retrieval component, it also opens new avenues for innovation and development in AI. As organizations continue to explore it, the model has the potential to improve user experiences and drive efficiency across many applications.