
Understanding Gradient Boosted Trees

Gradient Boosted Trees (GBT) are a powerful machine learning technique commonly used for regression and classification tasks. They build a predictive model iteratively: each new weak learner, typically a shallow decision tree, is fitted to the remaining errors of the ensemble built so far (more precisely, to the negative gradient of the loss), and its output is added to the running prediction. To harness the full potential of GBT, proper tuning of its hyperparameters is essential.
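The iterative idea can be made concrete with a minimal sketch, assuming squared-error regression on a single numeric feature and depth-1 trees (stumps) as the weak learners. The function names (`fit_stump`, `fit_gbt`, `predict_gbt`) are illustrative and not taken from any library; real implementations support multi-feature trees, other loss functions, and many optimizations.

```python
# Minimal gradient boosting for squared-error regression on one numeric feature.
# Weak learners are depth-1 trees ("stumps"); each is fitted to the residuals
# y - p, which equal the negative gradient of the loss 0.5 * (y - p) ** 2.


def fit_stump(xs, residuals):
    """Return (threshold, left_value, right_value) with the lowest squared error.

    Assumes xs contains at least two distinct values.
    """
    best = None
    # Rows with x <= threshold go left; the largest x would leave the right side empty.
    for t in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, t, lv, rv)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def fit_gbt(xs, ys, n_estimators=50, learning_rate=0.1):
    base = sum(ys) / len(ys)  # start from a constant prediction: the target mean
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_estimators):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Add the new tree's output, scaled down by the learning rate.
        preds = [p + learning_rate * predict_stump(stump, x) for p, x in zip(preds, xs)]
    return {"base": base, "learning_rate": learning_rate, "stumps": stumps}


def predict_gbt(model, x):
    total = sum(predict_stump(s, x) for s in model["stumps"])
    return model["base"] + model["learning_rate"] * total


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [0.0, 0.0, 0.0, 0.0, 10.0, 10.0, 10.0, 10.0]
model = fit_gbt(xs, ys, n_estimators=50, learning_rate=0.1)
print(round(predict_gbt(model, 2), 2), round(predict_gbt(model, 7), 2))
```

Each round removes part of the remaining training error, so after 50 rounds the predictions sit close to the targets of 0 and 10.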

What are Hyperparameters?

Hyperparameters are settings that govern a machine learning model’s structure and training process. Unlike parameters, which the model learns from data (in GBT, the split thresholds and leaf values of each tree), hyperparameters must be set before training begins. In the context of GBT, tuning these settings can make a significant difference in how well the model performs on unseen data.

Key Hyperparameters to Tune

When working with Gradient Boosted Trees, several hyperparameters play crucial roles. Understanding how each one affects the model lets you adjust it effectively.

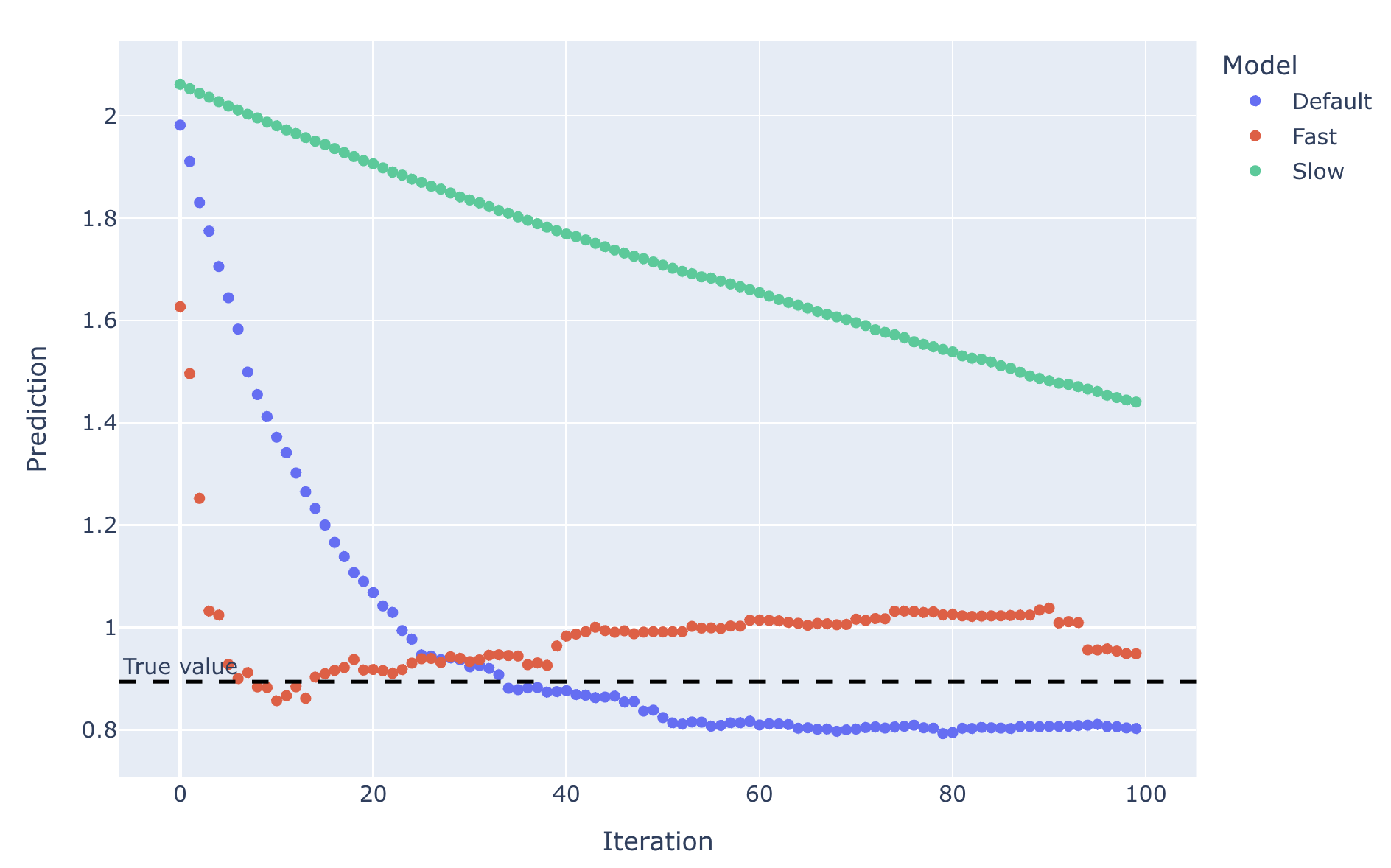

1. Learning Rate

The learning rate (also called shrinkage) scales the contribution of each new tree before it is added to the ensemble. A smaller learning rate means each tree corrects only a small part of the remaining error, so more iterations are needed, but the model often generalizes better. Conversely, a larger learning rate lets the model fit the training data in fewer iterations but may overshoot the optimal solution and overfit.

Tips for Setting Learning Rate:

- Start with a moderate value like 0.1.

- Experiment with smaller values (e.g., 0.01) for more refined learning, and increase the number of estimators to compensate.
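To see why a smaller learning rate needs more iterations, consider an idealized case, assumed here purely for illustration, in which every tree fits the current residuals exactly. Each round then removes a fraction `learning_rate` of the remaining error, so after m rounds the error is (1 - learning_rate)^m of its starting value. The helper `rounds_needed` is hypothetical.

```python
import math


def rounds_needed(learning_rate, tol=0.01):
    """Rounds until the residual falls to `tol` of its initial size.

    Idealized assumption: each tree fits the current residual exactly, so
    every round multiplies the remaining residual by (1 - learning_rate).
    """
    if not 0 < learning_rate < 1:
        raise ValueError("learning_rate must be strictly between 0 and 1")
    return math.ceil(math.log(tol) / math.log(1 - learning_rate))


for lr in (0.5, 0.1, 0.01):
    print(lr, rounds_needed(lr))  # 7, 44 and 459 rounds respectively
```

Real trees rarely fit the residuals exactly, so these counts only illustrate the trade-off; they are not a prediction for any dataset.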

2. Number of Estimators

The number of estimators represents the total number of trees in the model. A greater number of trees can lead to better performance but may increase the risk of overfitting.

Strategy for Number of Estimators:

- Use cross-validation to find a balance between model complexity and overfitting.

- Monitor validation metrics as you increase the number of trees, and stop adding trees once they no longer improve.
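One way to monitor performance as trees are added is early stopping on a held-out validation set. The sketch below assumes you already have the validation loss after each boosting round (for example, computed from scikit-learn's `staged_predict`) and picks the number of trees with the lowest loss, giving up after `patience` rounds without improvement. The function name and the `patience` value are illustrative.

```python
def best_n_estimators(val_losses, patience=10):
    """Choose the number of trees from a per-round validation-loss curve.

    val_losses[i] is the validation loss after i + 1 trees. Scanning stops
    once the loss has not improved for `patience` consecutive rounds.
    """
    best_loss, best_n, since_best = float("inf"), 0, 0
    for n, loss in enumerate(val_losses, start=1):
        if loss < best_loss:
            best_loss, best_n, since_best = loss, n, 0
        else:
            since_best += 1
            if since_best >= patience:
                break  # no improvement for `patience` rounds: stop early
    return best_n


# Synthetic U-shaped validation curve for illustration only (not real results).
val_losses = [1.0 / n + 0.01 * n for n in range(1, 101)]
print(best_n_estimators(val_losses, patience=5))  # 10
```

scikit-learn's gradient boosting estimators offer a built-in variant of this idea through their `n_iter_no_change` and `validation_fraction` parameters.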

3. Maximum Depth of Trees

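The maximum depth limits how many successive splits can occur on any path from the root to a leaf, so a tree of depth d has at most 2^d leaves. Deeper trees can model more complex interactions between features but are also more likely to overfit.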

How to Optimize Depth:

- Test various depths, ranging from shallow values (3-5) to deeper structures (10-15).

- Use a systematic search such as grid search, scored with cross-validation, to select the depth.

4. Minimum Samples Split

This parameter dictates the minimum number of samples required to split a node. Setting a higher value can help in preventing overfitting by ensuring splits only occur with enough data points.

Recommendations:

- Begin with 2 (the default in scikit-learn’s gradient boosting estimators) and increase gradually.

- Evaluate the effect on the model’s generalization ability.

5. Minimum Samples Leaf

The minimum samples leaf setting controls the minimum number of samples that must be present in a leaf node. Increasing this number can help ensure that the trees do not become too sensitive to noise in the dataset.

Exploring Leaf Node Size:

- Try values like 1, 5, or even higher, depending on the dataset size.
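The three tree-shape settings above (maximum depth, minimum samples to split, minimum samples per leaf) all act as stopping rules while a single tree is grown. The following pure-Python sketch of a squared-error regression tree shows where each check happens. The parameter names mirror scikit-learn's `max_depth`, `min_samples_split`, and `min_samples_leaf`, but the code is a simplified illustration, not that library's implementation.

```python
def build_tree(X, y, depth=0, max_depth=3, min_samples_split=2, min_samples_leaf=1):
    """Grow a squared-error regression tree as nested dicts (min_samples_leaf >= 1)."""
    mean = sum(y) / len(y)
    # Stop: depth limit reached, too few samples to split, or node already pure.
    if depth >= max_depth or len(y) < min_samples_split or len(set(y)) == 1:
        return {"leaf": mean}
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            left = [i for i, row in enumerate(X) if row[j] <= t]
            right = [i for i, row in enumerate(X) if row[j] > t]
            # Skip splits that would leave fewer than min_samples_leaf samples on a side.
            if len(left) < min_samples_leaf or len(right) < min_samples_leaf:
                continue
            sse = 0.0
            for side in (left, right):
                m = sum(y[i] for i in side) / len(side)
                sse += sum((y[i] - m) ** 2 for i in side)
            if best is None or sse < best[0]:
                best = (sse, j, t, left, right)
    if best is None:  # no split satisfies min_samples_leaf
        return {"leaf": mean}
    _, j, t, left, right = best
    limits = dict(max_depth=max_depth, min_samples_split=min_samples_split,
                  min_samples_leaf=min_samples_leaf)
    return {
        "feature": j,
        "threshold": t,
        "left": build_tree([X[i] for i in left], [y[i] for i in left], depth + 1, **limits),
        "right": build_tree([X[i] for i in right], [y[i] for i in right], depth + 1, **limits),
    }


def count_leaves(node):
    if "leaf" in node:
        return 1
    return count_leaves(node["left"]) + count_leaves(node["right"])


X = [[i] for i in range(16)]
y = [float(i) for i in range(16)]
print(count_leaves(build_tree(X, y, max_depth=3)))                      # 8
print(count_leaves(build_tree(X, y, max_depth=3, min_samples_leaf=4)))  # 4
print(count_leaves(build_tree(X, y, max_depth=3, min_samples_split=10)))  # 2
```

Lowering the depth or raising either minimum yields fewer, larger leaves, which is the smoothing effect that makes trees less sensitive to noise.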

Regularization Techniques

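To prevent overfitting, it’s crucial to regularize GBT models. The learning rate and the tree-size limits above already act as regularizers, and many implementations, such as XGBoost and LightGBM, also add explicit penalties on the leaf weights (the values the leaves output). Two common forms are: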

1. L1 Regularization (Lasso)

L1 regularization adds a penalty proportional to the sum of the absolute values of the leaf weights. It can set the weights of weakly supported leaves to exactly zero, making each tree more conservative.

2. L2 Regularization (Ridge)

L2 regularization adds a penalty proportional to the sum of the squared leaf weights. It shrinks every leaf value toward zero, which reduces the influence of any single tree and makes the model more stable.
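As a sketch of how these penalties act on a single leaf, assume an XGBoost-style second-order objective: for the samples in a leaf, let G and H be the sums of the first and second derivatives of the loss with respect to the current predictions. Minimizing G*w + (H + lambda)*w^2/2 + alpha*|w| over the leaf weight w gives the closed form below, where L2 (`reg_lambda`) enlarges the denominator and L1 (`reg_alpha`) soft-thresholds G. The parameter names follow XGBoost's scikit-learn wrapper; the function itself is an illustrative derivation, not that library's code.

```python
def leaf_weight(gradients, hessians, reg_lambda=1.0, reg_alpha=0.0):
    """Leaf value minimizing G*w + 0.5*(H + reg_lambda)*w**2 + reg_alpha*abs(w).

    G and H are the sums of the loss's first and second derivatives over the
    samples that fall in the leaf.
    """
    G, H = sum(gradients), sum(hessians)
    # L1: soft-threshold G; if |G| <= reg_alpha the leaf weight is exactly 0.
    if G > reg_alpha:
        G = G - reg_alpha
    elif G < -reg_alpha:
        G = G + reg_alpha
    else:
        return 0.0
    # L2: reg_lambda enlarges the denominator, shrinking the weight toward 0.
    return -G / (H + reg_lambda)


# Squared-error loss 0.5 * (y - p) ** 2: gradient p - y, hessian 1.
residuals = [2.0, 3.0, 4.0]  # y - p for the three samples in one leaf
g = [-r for r in residuals]
h = [1.0] * len(residuals)
print(leaf_weight(g, h, reg_lambda=0.0))                  # 3.0 (the mean residual)
print(leaf_weight(g, h, reg_lambda=1.0))                  # 2.25
print(leaf_weight(g, h, reg_lambda=1.0, reg_alpha=1.0))   # 2.0
print(leaf_weight(g, h, reg_lambda=1.0, reg_alpha=10.0))  # 0.0
```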

Advantages of Regularization:

- Reduces model complexity.

- Improves predictive performance on unseen data.

Evaluating Performance

Once you have tuned your model’s hyperparameters, the next step is to evaluate its performance. This can involve various metrics based on the type of problem you are tackling:

For Classification Tasks:

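- Accuracy: The fraction of predictions that are correct.

- F1 Score: The harmonic mean of precision and recall, which is more informative than accuracy when classes are imbalanced.

- AUC-ROC: The area under the ROC curve, which measures how well the model ranks positive examples above negative ones across all classification thresholds.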
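The three classification metrics can be written in a few lines of plain Python for binary labels (0 and 1), which makes their definitions concrete. These are illustrative re-implementations; in practice, library versions such as those in `sklearn.metrics` handle edge cases and multiclass data. The AUC here uses its rank interpretation: the probability that a randomly chosen positive is scored above a randomly chosen negative, with ties counted as one half. The scores in the usage example are made up.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # precision and recall are both 0 (or undefined)
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def roc_auc(y_true, scores):
    """Fraction of (positive, negative) pairs ranked correctly; needs both classes."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 0, 1, 1, 0, 0]
scores = [0.9, 0.4, 0.35, 0.8, 0.1, 0.6]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # threshold the scores at 0.5
print(round(accuracy(y_true, y_pred), 3))  # 0.667
print(round(f1_score(y_true, y_pred), 3))  # 0.667
print(round(roc_auc(y_true, scores), 3))   # 0.778
```

Accuracy and F1 depend on the chosen threshold, while AUC uses only the ordering of the scores.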

For Regression Tasks:

- Mean Absolute Error (MAE): The average absolute difference between predicted and true values, expressed in the target’s units.

- Mean Squared Error (MSE): The average of the squared errors, which penalizes large errors more heavily than MAE does.

- R² Score: The proportion of the variance in the target that the model explains; 1 is a perfect fit, and 0 is no better than always predicting the mean.
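The regression metrics are equally short. This sketch assumes plain Python lists of true and predicted values; the function names are illustrative and follow common naming rather than any particular library's API.

```python
def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def r2_score(y_true, y_pred):
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # unexplained variation
    ss_tot = sum((t - mean) ** 2 for t in y_true)                # total variation
    return 1 - ss_res / ss_tot


y_true = [3, 5, 7, 9]
y_pred = [2, 5, 8, 9]
print(mae(y_true, y_pred), mse(y_true, y_pred), r2_score(y_true, y_pred))  # 0.5 0.5 0.9
```

With errors of only 0 or 1, MAE and MSE coincide here; a single large error would raise MSE much more than MAE.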

Cross-Validation Techniques

Cross-validation helps ensure that your model is robust and generalizes beyond the data it was trained on. K-fold cross-validation divides your dataset into ‘K’ subsets (folds) and uses each one exactly once as the test set, so every sample contributes to the performance estimate.

Steps to Implement Cross-Validation:

- Split your dataset into K roughly equal parts.

- Use K-1 parts for training and the remaining one for testing.

- Repeat this process K times, rotating the test set, and average the K scores.
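The steps above can be sketched without any library, assuming the data fit in memory as Python lists. `k_fold_indices` assigns consecutive blocks of indices to folds (when the sample count is not divisible by K, the first folds get one extra sample, as scikit-learn's `KFold` does), and `k_fold_score` averages a user-supplied scoring function over the folds. Both names, and the baseline model in the usage example, are illustrative. Shuffle the data first if its order carries information.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, test_indices) for each of the k folds, without shuffling."""
    # The first n_samples % k folds get one extra sample.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


def k_fold_score(X, y, k, fit_and_score):
    """Average fit_and_score(X_train, y_train, X_test, y_test) over k folds."""
    scores = []
    for train, test in k_fold_indices(len(y), k):
        scores.append(fit_and_score(
            [X[i] for i in train], [y[i] for i in train],
            [X[i] for i in test], [y[i] for i in test],
        ))
    return sum(scores) / len(scores)


def mean_baseline_mse(X_train, y_train, X_test, y_test):
    # Hypothetical baseline "model": always predict the training mean, scored by MSE.
    mean = sum(y_train) / len(y_train)
    return sum((t - mean) ** 2 for t in y_test) / len(y_test)


X = [[float(i)] for i in range(10)]
y = [2.0 * i for i in range(10)]
print([len(test) for _, test in k_fold_indices(len(y), 3)])  # [4, 3, 3]
print(k_fold_score(X, y, 3, mean_baseline_mse))
```

In a real workflow, `fit_and_score` would train a GBT model on the training folds and return its metric on the test fold.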

Using Grid Search for Hyperparameter Tuning

Grid search is an exhaustive method for systematically testing different hyperparameter combinations. This approach involves specifying a set of values for each hyperparameter and evaluating the model’s performance for every possible combination, so its cost grows multiplicatively with each hyperparameter added.

Steps for Implementing Grid Search:

- Define the hyperparameters and their respective value ranges.

- Train the model using each combination.

- Evaluate performance with cross-validation.

- Select the combination yielding the best score.
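The procedure can be sketched generically: enumerate every combination with `itertools.product`, score each one, and keep the best. In this sketch, `score_fn` is an assumption standing in for whatever evaluation you use, typically a cross-validated score where higher is better; the toy scoring function in the usage example is hypothetical and not derived from real data. scikit-learn's `GridSearchCV` packages the same pattern together with cross-validation.

```python
from itertools import product


def grid_search(param_grid, score_fn):
    """Evaluate every combination in param_grid; return (best_params, best_score).

    param_grid maps each hyperparameter name to a list of candidate values.
    score_fn(params) returns a score where higher is better.
    """
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


grid = {"learning_rate": [0.01, 0.1, 0.3], "max_depth": [3, 4, 5, 8]}


def toy_score(params):
    # Hypothetical stand-in for a cross-validated score (higher is better).
    return -(params["learning_rate"] - 0.1) ** 2 - (params["max_depth"] - 4) ** 2


best_params, best_score = grid_search(grid, toy_score)
print(best_params)  # {'learning_rate': 0.1, 'max_depth': 4}
```

This small grid already has 3 x 4 = 12 combinations, and each additional hyperparameter multiplies that count, which is why grids are usually kept small.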

Conclusion

Tuning Gradient Boosted Trees can significantly impact their performance and predictive power. By focusing on key hyperparameters such as the learning rate, number of estimators, and tree depth, alongside regularization techniques and thorough evaluation processes, you can enhance your model’s accuracy and robustness. Systematic strategies like grid search and cross-validation will further help you find well-performing settings.

Mastering these elements can place you on the path to developing highly effective machine learning models using Gradient Boosted Trees, enabling you to tackle complex datasets with confidence.
