
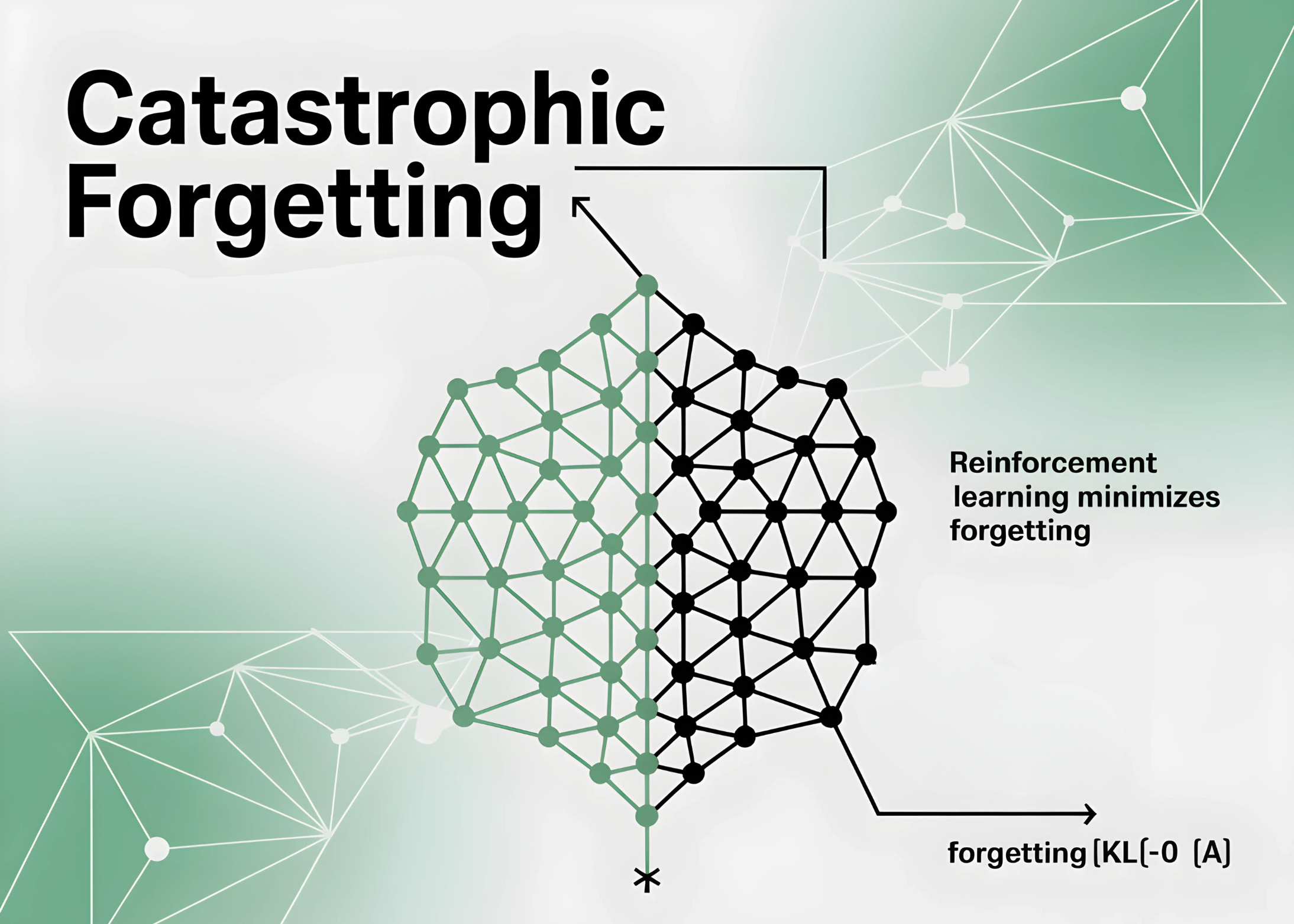

A New MIT Study Shows Reinforcement Learning Minimizes Catastrophic Forgetting Compared to Supervised Fine-Tuning

Understanding Catastrophic Forgetting in Machine Learning

Introduction to Machine Learning and Its Challenges

Machine learning has transformed many fields by letting systems learn from data and improve over time. One significant challenge, however, is a phenomenon known as catastrophic forgetting: after a model is trained on new information, its performance on what it learned earlier drops sharply. The problem affects applications from natural language processing to robotic control.

The Basics of Catastrophic Forgetting

Catastrophic forgetting is most prevalent in artificial neural networks. When these networks are trained sequentially on different tasks, they often struggle to retain information from earlier tasks. This leads to diminished performance in those areas as the system adapts to new data. Understanding how to mitigate this problem is crucial for the development of more robust and effective machine learning models.
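To make the effect concrete, here is a minimal sketch of sequential training on two tasks. It is a toy illustration, not an experiment from the study: the two-dimensional data, the linear classifier, and names such as `make_task` and `train` are all assumptions chosen to keep the example short. The model first learns task A, then is trained only on task B, and its accuracy on task A is measured before and after.

```python
import math
import random


def make_task(rng, n, direction):
    """Sample n points in [-1, 1]^2, labeled 1 on the positive side of `direction`."""
    data = []
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        y = 1 if x[0] * direction[0] + x[1] * direction[1] > 0 else 0
        data.append((x, y))
    return data


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train(w, data, epochs=20, lr=0.5):
    """Plain SGD on the logistic loss; updates and returns the weight list w."""
    for _ in range(epochs):
        for x, y in data:
            err = sigmoid(w[0] * x[0] + w[1] * x[1]) - y
            w[0] -= lr * err * x[0]
            w[1] -= lr * err * x[1]
    return w


def accuracy(w, data):
    hits = sum((w[0] * x[0] + w[1] * x[1] > 0) == (y == 1) for x, y in data)
    return hits / len(data)


rng = random.Random(0)
# Hypothetical tasks: A splits the plane along x0 + x1 = 0, B along x0 - x1 = 0.
task_a_train, task_a_test = make_task(rng, 200, (1, 1)), make_task(rng, 200, (1, 1))
task_b_train, task_b_test = make_task(rng, 200, (1, -1)), make_task(rng, 200, (1, -1))

w = train([0.0, 0.0], task_a_train)
acc_a_before = accuracy(w, task_a_test)

w = train(w, task_b_train)  # sequential training: no task A data is revisited
acc_a_after = accuracy(w, task_a_test)
acc_b_after = accuracy(w, task_b_test)

print(f"task A before: {acc_a_before:.2f}")
print(f"task A after:  {acc_a_after:.2f}")
print(f"task B after:  {acc_b_after:.2f}")
```

Because the two decision boundaries are perpendicular, fitting task B pulls the weights away from the direction that solved task A, so accuracy on task A falls toward chance. Real networks have far more capacity, but the same overwriting of shared parameters is what the term describes.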

The Role of Reinforcement Learning

Reinforcement Learning (RL) is a branch of machine learning where an agent learns to make decisions by taking actions in an environment to maximize a reward signal. Unlike supervised learning, where models learn from a labeled dataset, reinforcement learning allows models to learn from interaction with their environment. This learning paradigm has shown promise in various areas, including gaming and robotics.
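The interaction loop described above can be sketched with one of the simplest RL settings, a two-armed bandit. This is an illustrative example only; the reward probabilities, the epsilon-greedy rule, and the function name `run_bandit` are assumptions, not details from the study. The agent never sees labels. It only observes the reward that follows each action it chooses.

```python
import random


def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a Bernoulli bandit; returns its value estimate per action."""
    rng = random.Random(seed)
    values = [0.0] * len(reward_probs)  # running mean reward per action
    counts = [0] * len(reward_probs)
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))  # explore a random action
        else:
            action = max(range(len(values)), key=values.__getitem__)  # exploit best estimate
        # The environment returns a reward signal, not a correct label.
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]
    return values


values = run_bandit([0.2, 0.8])
best_action = max(range(len(values)), key=values.__getitem__)
print("estimated values:", [round(v, 2) for v in values])
print("preferred action:", best_action)
```

A supervised learner would be told which action is correct for each example. Here the agent has to discover that by trying actions and averaging the rewards it receives.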

Recent Findings from MIT

A recent study by researchers at the Massachusetts Institute of Technology (MIT) highlights the advantages of reinforcement learning in minimizing catastrophic forgetting. The research indicates that RL techniques can retain previously learned knowledge better than traditional supervised fine-tuning approaches.

Comparing Reinforcement Learning and Supervised Fine-Tuning

Understanding Supervised Fine-Tuning

Supervised fine-tuning is a popular method in machine learning. It usually involves training a pretrained model on a new labeled dataset so that its parameters adjust to the new task. While this method is effective for many applications, it has drawbacks, most notably the risk of catastrophic forgetting.

As new tasks are introduced, the model tends to overwrite its previous knowledge. This raises concerns when the model must maintain proficiency across a range of tasks, particularly in dynamic or evolving environments.

How Reinforcement Learning Minimizes Forgetting

The MIT study demonstrates that reinforcement learning mitigates forgetting more effectively than supervised fine-tuning. By continuously interacting with an environment, an RL model can update what it knows without discarding previous experience. This lets the model retain valuable information while remaining flexible enough to learn new tasks.

- Data Efficiency: RL frameworks often require fewer data points to learn effectively. By using feedback from the environment, these models can adjust their strategies without losing previously acquired knowledge.

- Dynamic Learning: The nature of reinforcement learning enables models to re-evaluate their experiences continually. As new information is integrated, older knowledge remains intact, promoting a more holistic understanding.
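One way to build intuition for this contrast is a toy policy over four possible answers, fine-tuned in two ways. The sketch below is not the study's experiment. The logits, the reward vector, and the use of KL divergence from the base policy as a rough stand-in for forgetting are all assumptions made for illustration. Two answers are correct on the new task; the supervised labels contain only one of them, while the reward-driven update reinforces whatever correct answers the model already tends to produce.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl(p, q):
    """KL(p || q) for two categorical distributions."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def expected_reward(p, rewards):
    return sum(pi * r for pi, r in zip(p, rewards))


def finetune(logits, step_fn, rewards, target=0.95, lr=0.5, max_steps=10_000):
    """Apply step_fn until the expected reward on the new task reaches `target`."""
    logits = list(logits)
    for _ in range(max_steps):
        p = softmax(logits)
        if expected_reward(p, rewards) >= target:
            break
        logits = [v + lr * g for v, g in zip(logits, step_fn(p))]
    return softmax(logits)


BASE_LOGITS = [2.0, 1.0, 0.0, 0.0]  # hypothetical base policy, prefers answer 0
REWARDS = [0.0, 1.0, 1.0, 0.0]      # new task: answers 1 and 2 are both correct
LABEL = 2                           # the labeled dataset happens to contain only answer 2


def sft_step(p):
    # Gradient of log p[LABEL] with respect to the logits: one_hot(LABEL) - p.
    return [(1.0 if k == LABEL else 0.0) - pk for k, pk in enumerate(p)]


def policy_gradient_step(p):
    # Exact gradient of the expected reward J = sum_k p_k * r_k: p_k * (r_k - J).
    j = expected_reward(p, REWARDS)
    return [pk * (r - j) for pk, r in zip(p, REWARDS)]


base = softmax(BASE_LOGITS)
p_sft = finetune(BASE_LOGITS, sft_step, REWARDS)
p_rl = finetune(BASE_LOGITS, policy_gradient_step, REWARDS)
kl_sft, kl_rl = kl(p_sft, base), kl(p_rl, base)

print("SFT policy:", [round(v, 3) for v in p_sft], "KL from base:", round(kl_sft, 3))
print("RL policy: ", [round(v, 3) for v in p_rl], "KL from base:", round(kl_rl, 3))
```

Both runs stop at the same success rate on the new task, but the supervised update has to move probability onto an answer the base policy rarely gave, while the reward-driven update mostly amplifies a correct answer the base policy already favored. In this toy setting that leaves the RL policy closer to where it started, which is the intuition behind retaining more prior behavior.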

Implications for the Future of Machine Learning

The findings from the MIT study may reshape how researchers and practitioners approach problem-solving in machine learning. By leveraging the strengths of reinforcement learning, developers can create more robust systems that maintain performance across various tasks without suffering from catastrophic forgetting.

Applications Across Domains

The implications of this research extend across multiple areas:

- Natural Language Processing: NLP models can benefit from RL techniques, enabling them to understand contextual nuances while retaining learned vocabulary or grammar rules.

- Robotics: Robots often face a variety of tasks in real-world scenarios. Using RL, they can adapt to new tasks without forgetting previous capabilities, enhancing their performance and utility.

- Game AI: In gaming, an AI agent can improve its strategies while simultaneously ensuring that it retains effective tactics from past experiences.

Challenges and Limitations

While reinforcement learning offers clear advantages, it also has limitations. The study identifies challenges in implementing RL methods effectively.

- Complexity and Resource Intensity: Reinforcement learning can be more complex than supervised learning. Training times often increase, and resource allocation becomes vital for successful implementation.

- Learning Stability: The dynamic nature of reinforcement learning can sometimes make training unstable. Keeping the model from overfitting to new tasks while it retains old knowledge requires careful management.

Moving Forward: Bridging Techniques

Rather than completely replacing traditional methods, the future of machine learning may lie in integrating various techniques. By combining the stability of supervised fine-tuning with the adaptability of reinforcement learning, researchers can create hybrid models that leverage the best of both worlds.

Conclusion

The MIT study provides critical insights into using reinforcement learning to effectively minimize catastrophic forgetting in neural networks. By understanding the differences between reinforcement learning and supervised fine-tuning, as well as the potential for hybrid approaches, researchers can pave the way for more resilient machine learning systems.

As the field evolves, these findings can significantly influence the development of intelligent systems capable of lifelong learning without the risk of losing previously acquired knowledge. Embracing these advancements will be essential for pushing the boundaries of what machine learning can achieve in various applications, making technologies more adaptive and robust as they confront complex real-world challenges.