
Deploy Scalable AI Inference with NVIDIA NIM Operator 3.0.0

Introduction to NVIDIA NIM Operator 3.0.0

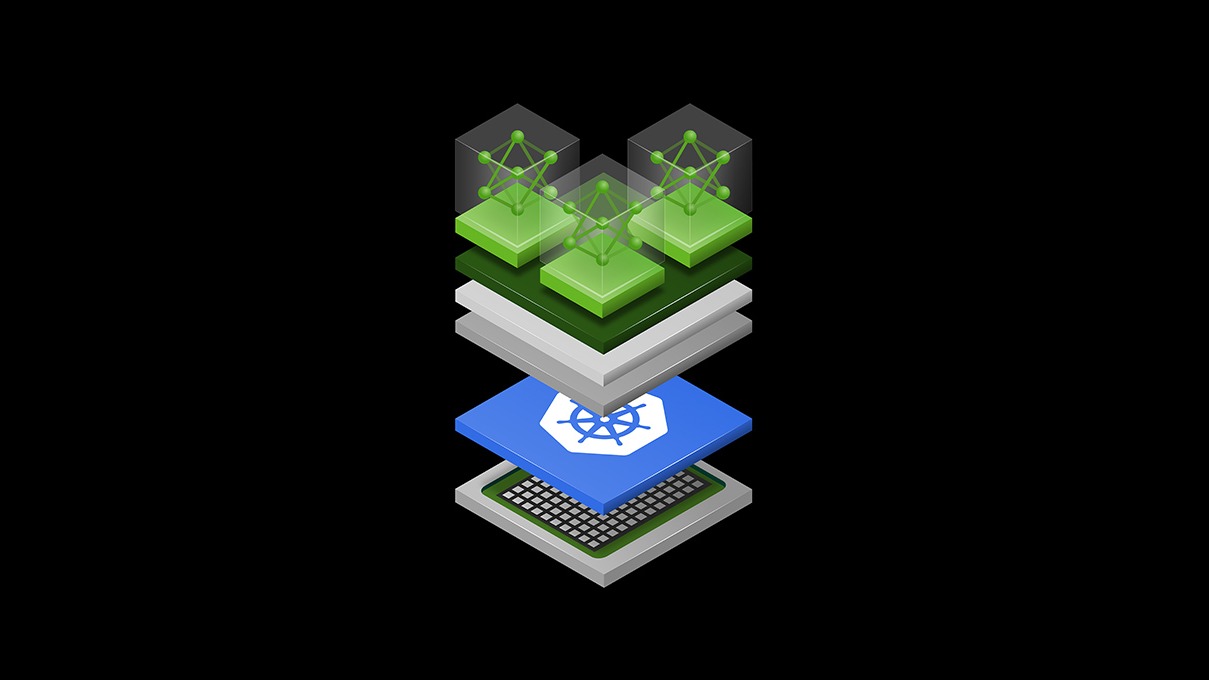

Artificial intelligence (AI) is changing how industries work, but deploying AI applications raises a recurring challenge: the inference stage has to scale with workloads that vary over time. NVIDIA NIM Operator 3.0.0 is designed to address this challenge by making it easier for organizations to deploy scalable AI inference on Kubernetes clusters.

What is NVIDIA NIM Operator?

NVIDIA NIM Operator is a Kubernetes operator that simplifies deploying and managing AI models packaged as NVIDIA NIM (NVIDIA Inference Microservices). By automating much of the deployment process, it lets data scientists and developers focus on model development rather than infrastructure management.

Key Features of NIM Operator 3.0.0

Enhanced Scalability

Scalability is the headline feature of NIM Operator 3.0.0. The operator lets users adjust resource allocation as workload demand changes, so deployments can handle varying traffic levels while maintaining performance.

Improved Resource Management

Managing AI inference infrastructure can be complex, especially at scale. NIM Operator simplifies this by letting users declare the resource requirements of each model, which helps maintain performance while minimizing wasted capacity.
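Declaring resource requirements starts with knowing roughly how much GPU memory a model needs. The sketch below is a back-of-the-envelope estimate, not part of NIM Operator: it assumes model weights dominate memory use and adds an illustrative overhead fraction (0.2 here, an assumption rather than an NVIDIA figure) for the KV cache, activations, and runtime buffers. The function name `estimate_gpus` and the example model sizes are hypothetical.

```python
import math


def estimate_gpus(params_billion: float, bytes_per_param: float,
                  gpu_memory_gb: float, overhead_fraction: float = 0.2) -> int:
    """Rough GPU count needed to hold a model's weights plus headroom.

    Weights take params * bytes_per_param bytes; with params in billions and
    memory in GB (1e9 bytes) the 1e9 factors cancel. overhead_fraction is an
    illustrative allowance for KV cache, activations, and runtime buffers,
    not a value published by NVIDIA.
    """
    if params_billion <= 0 or bytes_per_param <= 0 or gpu_memory_gb <= 0:
        raise ValueError("all sizes must be positive")
    weights_gb = params_billion * bytes_per_param      # e.g. 8B params * 2 bytes (FP16) = 16 GB
    needed_gb = weights_gb * (1 + overhead_fraction)   # add headroom beyond the raw weights
    return math.ceil(needed_gb / gpu_memory_gb)        # whole GPUs only; always >= 1 here
```

For example, an 8-billion-parameter model in FP16 needs about 16 GB for weights alone, so with 20% headroom it fits on a single 24 GB GPU, while a 70-billion-parameter FP16 model (about 140 GB of weights) needs three 80 GB GPUs under the same assumption. Treat the result as a lower bound: the parallelism layouts a given model profile actually supports may dictate a different GPU count.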

Support for Multiple Frameworks

NIM Operator 3.0.0 supports a variety of AI frameworks, including TensorFlow and PyTorch. This flexibility enables developers to use the tools they are already familiar with, streamlining the deployment of their models without needing to adapt to a new framework.

Deployment Simplified

Kubernetes Integration

NIM Operator is built to integrate with Kubernetes, the widely used container orchestration platform. As an operator, it automates the deployment, scaling, and management of AI applications, reducing operational complexity.
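Kubernetes operators generally work through a reconciliation loop: they compare the state a user declared with the state observed in the cluster, then act to close the gap. The sketch below illustrates that generic controller pattern in plain Python. It is not NIM Operator's code; `DesiredState`, `ObservedState`, their two fields, and the action strings are hypothetical simplifications, and a real controller would call the Kubernetes API instead of returning descriptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DesiredState:
    """Hypothetical, pared-down spec; real operator resources carry many more fields."""
    image: str
    replicas: int


@dataclass(frozen=True)
class ObservedState:
    """What the controller currently sees in the cluster (hypothetical fields)."""
    image: Optional[str]  # None means the workload has not been created yet
    replicas: int


def reconcile(desired: DesiredState, observed: ObservedState) -> List[str]:
    """Compare desired and observed state and list the actions that close the gap.

    A real controller would apply these through the Kubernetes API and then
    re-run on the next event; here we only return descriptions of the actions.
    """
    if observed.image is None:
        return [f"create workload image={desired.image} replicas={desired.replicas}"]
    actions: List[str] = []
    if observed.image != desired.image:
        actions.append(f"roll out image {desired.image}")
    if observed.replicas != desired.replicas:
        actions.append(f"scale {observed.replicas} -> {desired.replicas}")
    return actions  # empty list: already converged, nothing to do
```

The key design property is idempotence: once the cluster matches the declared state, `reconcile` returns no actions, so the loop can run on every event without causing repeated changes.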

User-Friendly Interface

The operator comes with a user-friendly interface for managing AI models. From this dashboard, users can monitor performance, manage resources, and deploy updates with minimal effort, so teams can concentrate on improving their models rather than wrestling with deployment logistics.

Performance Monitoring and Optimization

Real-Time Insights

NIM Operator also provides robust performance monitoring. Real-time insights help users understand and optimize model performance, and make it straightforward to identify bottlenecks and resource usage patterns so that data scientists can make data-driven decisions.
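Latency bottlenecks usually show up in tail percentiles (p95, p99) before they move the average. Monitoring tools typically report these directly; the sketch below only shows how they are derived from raw per-request latencies, using the nearest-rank method. The function names and the choice of percentiles are illustrative assumptions, not NIM Operator metrics.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted samples
    return ordered[rank - 1]


def latency_summary(latencies_ms: Sequence[float]) -> Dict[str, float]:
    """Summarize request latencies with the median and two tail percentiles."""
    return {f"p{p}": percentile(latencies_ms, p) for p in (50, 95, 99)}
```

Comparing p50 with p99 over time distinguishes a uniformly slow model (both rise) from intermittent stalls such as queueing under load (only the tail rises).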

Automated Scaling

Because scaling parameters can be tied to performance metrics, AI applications adapt to demand in real time. Inference stays responsive and efficient even during peak loads, and automatic scaling reduces both the risk of downtime and the cost of underutilized resources.
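Metric-based scaling on Kubernetes is commonly handled by a HorizontalPodAutoscaler (HPA). Assuming autoscaling is delegated to a standard HPA, the replica count follows the documented rule desired = ceil(currentReplicas × currentMetric / targetMetric), where the metric is averaged across pods, with no change when the ratio is within a tolerance (0.1 by default) of 1.0, and the result is clamped to the configured minimum and maximum. The sketch below reproduces only that core calculation; it omits the HPA's stabilization windows and pod-readiness handling, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int,
                     max_replicas: int, tolerance: float = 0.1) -> int:
    """Replica count from the Kubernetes HPA rule:
    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped
    to [min_replicas, max_replicas].
    """
    if current_replicas < 1 or target_metric <= 0 or min_replicas > max_replicas:
        raise ValueError("invalid scaling parameters")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas  # close enough to target: avoid flapping
    else:
        proposed = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, proposed))
```

For example, two replicas each averaging 150 units of a metric whose target is 50 scale to six replicas, while a reading of 52 against the same target stays inside the tolerance and leaves the replica count unchanged.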

Use Cases for NIM Operator 3.0.0

E-Commerce

In e-commerce, personalized recommendations play a significant role in the customer experience. With NVIDIA NIM Operator, businesses can deploy AI models that predict user behavior and preferences at scale, keeping operations smooth during traffic spikes such as sales events.

Healthcare

AI is increasingly used in healthcare for diagnostics and patient management. Because NVIDIA NIM Operator scales inference deployments, healthcare providers can deploy models quickly and analyze data in real time, which can support faster, better-informed patient care.

Autonomous Vehicles

For companies building autonomous vehicles, AI inference is critical for real-time decision-making. NIM Operator 3.0.0 enables rapid deployment and scaling of complex models, helping vehicles interpret and respond to their environments with minimal latency.

Best Practices for Deploying with NIM Operator

Define Resource Allocation Clearly

Before deploying, it’s vital to clearly define the resource needs for your models. Specify CPU, GPU, and memory requirements accurately to ensure optimal performance and to avoid resource wastage.
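In Kubernetes terms, these requirements become a container `resources` block with requests and limits. The sketch below builds one as a plain dictionary, assuming the custom resource you deploy accepts a standard Kubernetes resource-requirements block (check the NIM Operator reference for the exact field). `nvidia.com/gpu` is the resource name advertised by the NVIDIA device plugin; the helper name and the example values are hypothetical placeholders.

```python
from typing import Dict


def gpu_container_resources(cpu: str, memory: str, gpus: int) -> Dict[str, Dict[str, str]]:
    """Build a Kubernetes container `resources` block for a GPU inference pod.

    - nvidia.com/gpu is the resource name advertised by the NVIDIA device plugin.
    - GPUs cannot be overcommitted: if a GPU request is given it must equal the
      limit, so the same count goes in both.
    - Equal CPU and memory requests and limits in every container put the pod in
      the Guaranteed QoS class.
    """
    if gpus < 1:
        raise ValueError("a GPU inference container needs at least one GPU")
    spec = {"cpu": cpu, "memory": memory, "nvidia.com/gpu": str(gpus)}
    # Separate copies so later edits to one section do not silently change the other.
    return {"requests": dict(spec), "limits": dict(spec)}
```

Calling `gpu_container_resources("8", "32Gi", 1)` yields a block that can be serialized to YAML or JSON and placed in a manifest; the GPU count itself can come from a sizing estimate of the model's memory footprint.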

Monitor Performance Regularly

Use the provided monitoring tools to track your models' performance. Regular performance reviews help you identify areas that need optimization and make adjustments proactively.

Leverage Automated Scaling

Make the most of the automatic scaling features. Set appropriate performance thresholds to enable your models to scale dynamically based on real-time demand, enhancing efficiency while reducing idle resources.

Conclusion

NVIDIA NIM Operator 3.0.0 makes scalable AI inference more accessible. With its Kubernetes integration, scalability features, and real-time performance monitoring, it gives organizations a powerful tool for managing their AI applications. As businesses continue to seek innovative solutions, tools like NIM Operator can help them stay competitive in a rapidly evolving landscape.

By adopting NIM Operator, organizations can spend less effort on infrastructure and more on refining their AI models and delivering business outcomes.