# Implementing DeepSpeed for Scalable Transformers: Advanced Training with Gradient Checkpointing and Parallelism

## Introduction

In artificial intelligence and deep learning, the demand for scalable models is at an all-time high. Transformers in particular have been pivotal in advancing natural language processing. Training them, however, is resource-intensive and often demands more GPU memory and compute than a single device can offer. This is where DeepSpeed shines: it is a library designed to streamline the training of large models, speeding up training while reducing memory usage.

## What is DeepSpeed?

Developed by Microsoft, DeepSpeed is an open-source deep learning optimization library for training large-scale models efficiently. It offers a suite of features, including mixed-precision training, gradient accumulation, ZeRO memory partitioning, and gradient (activation) checkpointing. Together, these make it possible to train larger models than standard data-parallel training would permit, making it a valuable tool for researchers and practitioners alike.

## Key Features of DeepSpeed

### 1. Efficient Memory Utilization

One of DeepSpeed’s standout features is memory optimization. Its Zero Redundancy Optimizer (ZeRO) partitions optimizer states, gradients, and (at stage 3) parameters across data-parallel GPUs instead of replicating them on every device. Per-GPU memory therefore shrinks as GPUs are added, so larger models fit on the same hardware.
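To make the savings concrete, the sketch below estimates per-GPU memory for model states at each ZeRO stage. It assumes mixed-precision training with Adam, where each parameter costs 2 bytes for the fp16 weight, 2 bytes for the fp16 gradient, and 12 bytes of fp32 optimizer state (the accounting used in the ZeRO paper). Activations and temporary buffers are ignored, and the function name is illustrative rather than part of DeepSpeed.

```python
def zero_model_state_gb(num_params, num_gpus, stage):
    """Approximate per-GPU memory (GB) for model states at a given ZeRO stage."""
    params = 2 * num_params   # fp16 weights
    grads = 2 * num_params    # fp16 gradients
    optim = 12 * num_params   # fp32 weights, momentum, and variance (Adam)

    if stage >= 1:            # stage 1 partitions optimizer states
        optim /= num_gpus
    if stage >= 2:            # stage 2 also partitions gradients
        grads /= num_gpus
    if stage >= 3:            # stage 3 also partitions parameters
        params /= num_gpus
    return (params + grads + optim) / 1e9


for stage in range(4):
    gb = zero_model_state_gb(7.5e9, 64, stage)
    print(f"ZeRO stage {stage}: {gb:.1f} GB per GPU")
```

For a 7.5-billion-parameter model on 64 GPUs, this gives roughly 120 GB without ZeRO, 31.4 GB at stage 1, 16.6 GB at stage 2, and 1.9 GB at stage 3.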

### 2. Gradient Checkpointing

Gradient checkpointing (also called activation checkpointing) saves memory by storing only a subset of the intermediate activations during the forward pass. During the backward pass, the discarded activations are recomputed from the nearest stored checkpoint instead of being read from memory. This trades extra computation, roughly one additional forward pass, for a substantially smaller memory footprint, which lets you train deeper models without overloading your GPUs.
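The trade-off can be illustrated with simple counting. The sketch below assumes a chain of `num_layers` equally sized layers split into `num_segments` segments, with only the input of each segment kept during the forward pass. The cost model and the function name are illustrative simplifications, not DeepSpeed or PyTorch internals.

```python
import math


def peak_activations(num_layers, num_segments):
    """Activations held at once: one checkpoint per segment, plus the
    activations of the single segment being recomputed during backward."""
    segment_len = math.ceil(num_layers / num_segments)
    return num_segments + segment_len


num_layers = 64
print("no checkpointing:", num_layers)  # every layer's activation is stored
for k in (2, 4, 8, 16, 32):
    print(f"{k:>2} segments:", peak_activations(num_layers, k))
```

Under this model the count is smallest when the number of segments is close to the square root of the number of layers, which is why checkpointing is often described as reducing activation memory from linear to roughly square-root in depth.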

### 3. Mixed Precision Training

DeepSpeed supports mixed-precision training, which performs most computation in 16-bit floating point (fp16 or bf16) while keeping 32-bit copies of the weights for the optimizer update. This speeds up computation and lowers memory usage, shortening training times while largely preserving model accuracy.
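Training in fp16 typically relies on loss scaling, because small gradients can underflow to zero in half precision. The sketch below shows the effect using only the Python standard library; the gradient value and the scale factor of 2^16 are illustrative assumptions, and `to_fp16` is a hypothetical helper.

```python
import struct


def to_fp16(value):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("e", struct.pack("e", value))[0]


grad = 1e-8               # a small gradient computed in full precision
loss_scale = 2.0 ** 16    # illustrative scale factor

unscaled = to_fp16(grad)             # too small for fp16, becomes zero
scaled = to_fp16(grad * loss_scale)  # scaled value survives the cast
recovered = scaled / loss_scale      # unscale in higher precision

print(unscaled)
print(recovered)
```

Scaling the loss before the backward pass scales every gradient by the same factor, so dividing by that factor before the optimizer update recovers values that would otherwise have been lost.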

### 4. Advanced Parallelism

DeepSpeed can distribute training across multiple GPUs and nodes using data parallelism (with ZeRO), pipeline parallelism, and, in combination with frameworks such as Megatron-LM, tensor parallelism. These approaches can be combined, so training scales in both model size and throughput as hardware is added.

## Getting Started with DeepSpeed

### Prerequisites

Before diving into the implementation, ensure you have the following prerequisites:

- A compatible GPU (NVIDIA A100, V100, etc.)
- Python (version 3.6 or higher)
- PyTorch (properly installed)
- DeepSpeed library (which can be installed via pip)

### Installation

You can install DeepSpeed using pip with the following command:

```bash
pip install deepspeed
```

### Basic Configuration

The first step in using DeepSpeed is to configure training. This involves creating a configuration JSON file that specifies your training parameters, including batch size, learning rate, and other optimization settings.

```json
{
  "train_batch_size": 32,
  "gradient_accumulation_steps": 1,
  "fp16": {
    "enabled": true
  },
  "zero_optimization": {
    "stage": 2
  }
}
```

This example enables fp16 mixed precision and ZeRO stage 2, which partitions optimizer states and gradients across the data-parallel GPUs while each GPU keeps a full copy of the fp16 parameters.
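DeepSpeed treats `train_batch_size` as the global batch size, equal to the per-GPU micro-batch size times `gradient_accumulation_steps` times the number of GPUs. The sketch below derives the implied micro-batch size for the configuration above; the helper name and the GPU count of 4 are assumptions made for illustration.

```python
import json

ds_config = {
    "train_batch_size": 32,
    "gradient_accumulation_steps": 1,
    "fp16": {"enabled": True},
    "zero_optimization": {"stage": 2},
}


def micro_batch_per_gpu(config, world_size):
    """Derive the per-GPU micro-batch size implied by the config."""
    denom = config["gradient_accumulation_steps"] * world_size
    if config["train_batch_size"] % denom != 0:
        raise ValueError(
            "train_batch_size must be divisible by "
            "gradient_accumulation_steps * world_size"
        )
    return config["train_batch_size"] // denom


print(micro_batch_per_gpu(ds_config, world_size=4))  # 8 samples per GPU per step
print(json.dumps(ds_config, indent=2))               # JSON to save as the config file
```

Checking this relation before launching a job is a cheap way to catch a batch size that does not divide evenly across the available GPUs.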

## Implementing Gradient Checkpointing

### Step 1: Import Necessary Libraries

To implement gradient checkpointing, first import the required libraries:

```python
import torch
from torch import nn
import deepspeed
```

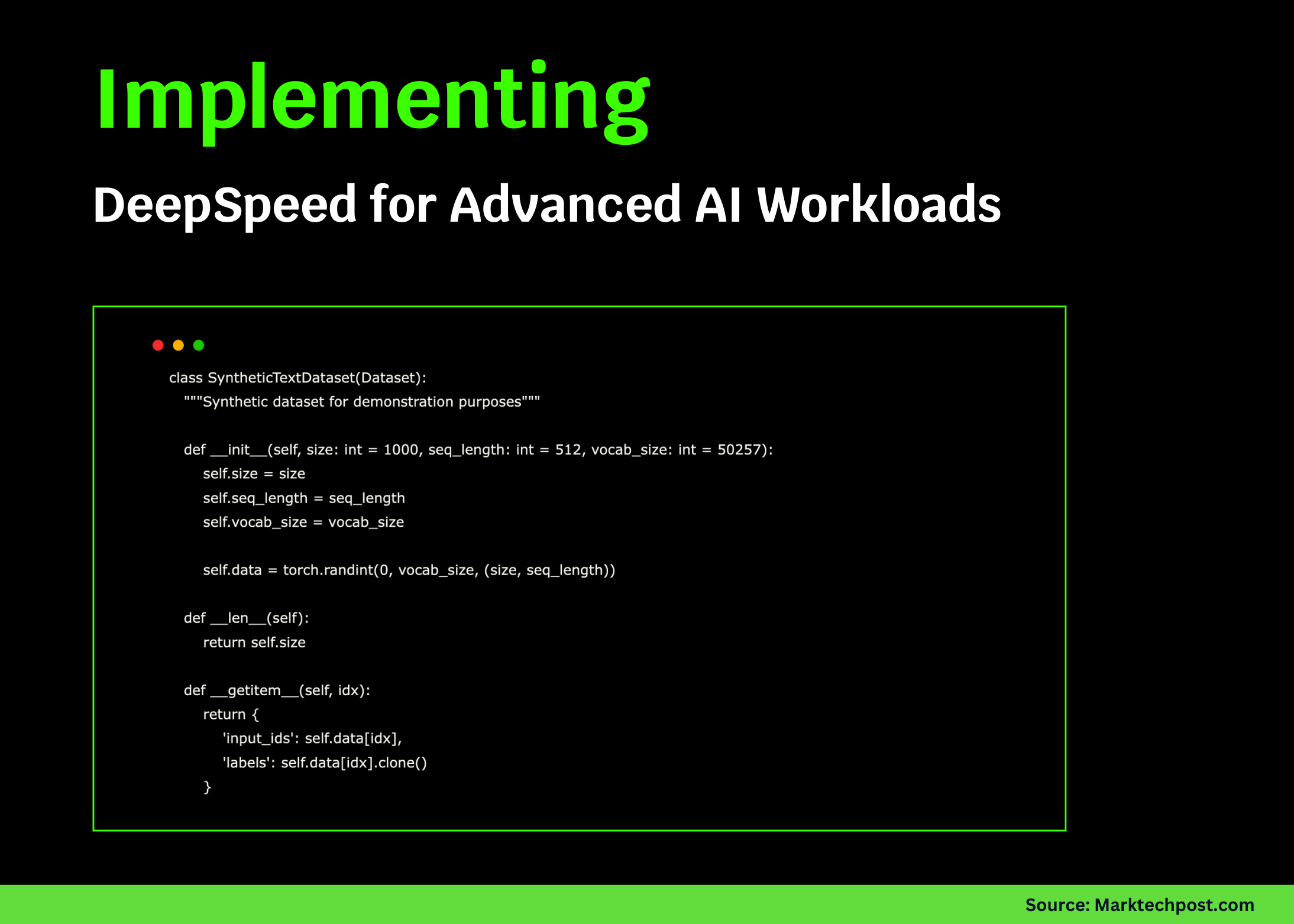

### Step 2: Create Your Model

Define your transformer model. Ensure that the forward method can handle gradient checkpointing:

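```python
from torch.utils.checkpoint import checkpoint

class MyTransformerModel(nn.Module):
    def __init__(self, d_model=512, nhead=8, num_layers=6):  # placeholder sizes
        super().__init__()
        # Define the model layers (a plain encoder stack, used here as a stand-in)
        self.model_layers = nn.ModuleList(
            nn.TransformerEncoderLayer(d_model, nhead, batch_first=True)
            for _ in range(num_layers)
        )

    def forward(self, x):
        # Checkpoint each layer: its activations are recomputed during backward
        for layer in self.model_layers:
            x = checkpoint(layer, x, use_reentrant=False)
        return x
```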

### Step 3: Initialize DeepSpeed

When initializing your model with DeepSpeed, pass in the configuration file:

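```python
model = MyTransformerModel()
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-4)  # placeholder learning rate

# deepspeed.initialize returns (engine, optimizer, dataloader, lr_scheduler)
model_engine, optimizer, _, _ = deepspeed.initialize(
    model=model,
    optimizer=optimizer,
    config="ds_config.json",  # assumed file name for the configuration JSON
)
```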
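Once the engine exists, the training loop calls the engine instead of the raw model and optimizer. The following is a minimal sketch, assuming batches are already on the engine's device; `train_one_epoch`, `data_loader`, and `loss_fn` are hypothetical names, while `backward` and `step` are methods of the DeepSpeed engine.

```python
def train_one_epoch(model_engine, data_loader, loss_fn):
    """Run one pass over data_loader and return the mean loss."""
    total_loss, num_batches = 0.0, 0
    for inputs, targets in data_loader:
        outputs = model_engine(inputs)    # forward pass through the wrapped model
        loss = loss_fn(outputs, targets)
        model_engine.backward(loss)       # used in place of loss.backward()
        model_engine.step()               # updates weights at accumulation boundaries
        total_loss += float(loss)
        num_batches += 1
    return total_loss / max(num_batches, 1)
```

Routing the backward pass and the optimizer step through the engine is what lets DeepSpeed apply loss scaling, gradient accumulation, and ZeRO partitioning without further changes to the loop.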

## Leveraging Advanced Parallelism

### Data Parallelism

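DeepSpeed supports data parallelism, in which each GPU processes its own slice of every batch and gradients are combined across GPUs; with ZeRO, the model states are additionally sharded across those GPUs rather than replicated. Communication behavior can be tuned in the `zero_optimization` section of the configuration JSON: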

```json
{
  "zero_optimization": {
    "stage": 2,
    "overlap_comm": true
  }
}
```

This setting overlaps gradient reduction with the backward computation, which can shorten each training step when communication would otherwise be a bottleneck.

### Model Parallelism

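For models too large to fit in a single GPU’s memory, model parallelism is essential: the model is split across GPUs so that each device holds and computes only part of it. DeepSpeed supports this through pipeline parallelism, where a model expressed as a sequence of layers is wrapped in `deepspeed.pipe.PipelineModule` and divided into stages, and it can be combined with tensor parallelism from frameworks such as Megatron-LM.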
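One simple way to picture splitting a model across GPUs is to assign a contiguous block of layers to each device, as pipeline-style model parallelism does. The sketch below only illustrates that idea: `partition_layers` is a hypothetical helper, the layer and GPU counts are arbitrary, and DeepSpeed's own partitioning logic may balance stages differently.

```python
def partition_layers(num_layers, num_stages):
    """Split layer indices into contiguous, near-equal stages (one per GPU)."""
    base, extra = divmod(num_layers, num_stages)
    stages, start = [], 0
    for stage in range(num_stages):
        size = base + (1 if stage < extra else 0)  # spread the remainder over early stages
        stages.append(range(start, start + size))
        start += size
    return stages


for gpu, layers in enumerate(partition_layers(num_layers=26, num_stages=4)):
    print(f"GPU {gpu}: layers {layers.start}-{layers.stop - 1}")
```

Each GPU then stores only the weights and activations of its own block and passes its output to the next stage.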

## Monitoring and Optimization

### Performance Monitoring

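Use DeepSpeed’s built-in logging to monitor training. Progress is logged at an interval set by `steps_per_print`, `wall_clock_breakdown` adds timing for the forward, backward, and optimizer-step phases, and the monitor configuration can write metrics to TensorBoard, Weights & Biases, or CSV files. Task metrics such as accuracy are computed in your own training loop; tracking them alongside the loss lets you spot problems early and adjust.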
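As a hedged example, the logging options can be added to the same configuration used for training. The sketch below builds the settings as a Python dictionary and prints the resulting JSON; the output path, job name, and logging interval are hypothetical values chosen for illustration.

```python
import json

monitoring = {
    "steps_per_print": 100,         # log training progress every 100 steps
    "wall_clock_breakdown": True,   # time the forward, backward, and step phases
    "tensorboard": {
        "enabled": True,
        "output_path": "runs/",         # hypothetical output directory
        "job_name": "transformer_ds",   # hypothetical run name
    },
}

base_config = {"train_batch_size": 32, "zero_optimization": {"stage": 2}}
ds_config = {**base_config, **monitoring}
print(json.dumps(ds_config, indent=2))
```

Keeping monitoring settings in the same file as the training settings means each run's logs can be traced back to the exact configuration that produced them.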

### Hyperparameter Tuning

Fine-tuning hyperparameters is crucial for achieving optimal performance. Experiment with learning rates, batch sizes, and model configurations, taking advantage of DeepSpeed’s capability to manage large parameter sets efficiently.

## Conclusion

In summary, implementing DeepSpeed for training scalable transformers is not only feasible but also significantly enhances the training efficiency of these large models. With features like gradient checkpointing and advanced parallelism, DeepSpeed empowers practitioners to push the boundaries of what’s possible in deep learning. By optimizing memory, accelerating training times, and allowing for the use of larger models, DeepSpeed is transforming the landscape of artificial intelligence research and development.

Incorporating these techniques into your workflows can lead to groundbreaking advancements in your projects, driving forward innovation in the AI space. With the right tools, the potential is limitless.